David Wootton has sent me some responses to the accusations made by some of the reviewers of his book The Invention of Science, including me in Nature and Steven Poole in New Statesman, that he somewhat over-eggs the “science wars”/relativism arguments. Some other reviewers have suggested that these polemical sections of the book are referring to an academic turf war that doesn’t need to be awarded so much space here. In her review in the Guardian, Lorraine Daston commented that this material is “unlikely to be of interest to readers who are not historians of science over the age of 50.” Well, I plead guilty to the second at least, and so perhaps it isn’t surprising that those chapters most certainly were of interest to me. I might not agree with all of David’s arguments in the book, but I was very happy to see them. It is a discussion that still needs to happen, not least because “histories of science” like Steven Weinberg’s To Explain the World are still being put out into the public arena.

For that reason too, I’m delighted to post David’s responses here. I don’t exactly disagree with anything he says; I think the issues are at least partly a matter of interpretation. For example, in my review I commented that Steven Shapin and Simon Schaffer’s influential Leviathan and the Air-Pump (1985) doesn’t to my eye offer the kind of “hard relativist” perspective that David seems to find in it. In my original draft of the book review, I also said the same about David’s comments on Simon Schaffer’s article on prisms:

“I see no reason to believe, for example, that Schaffer really questions Newton’s compound theory of white light in his 1989 essay on prisms and the experimentum crucis, but just that he doubts the persuasiveness of Newton’s own experimental evidence.”

David seemed to say that Simon’s comments even implied he had doubts about the modern theory of optics and additive mixing; I can’t find grounds for reaching that conclusion. In my conversations with Simon, I have never had the slightest impression that he doesn’t regard science as a system of thought that offers a progressively more reliable description of the world. If he thinks it is no truer than witchcraft, he hides it extraordinarily well.

As further evidence of S&S’s relativism, David quotes from Leviathan and the Air-Pump, which, he says, maintains that the success of experimental science depended on its proponents’ “political success ... in insinuating themselves into the activities of other institutions and other interest groups. He who has the most, and the most powerful, allies wins.” When I first read this (in preparing my book Curiosity), it never once occurred to me that S&S meant it as some kind of statement to the effect that we only think Boyle’s law is correct because Boyle was more politically astute than his opponents. I took it to mean that Boyle was able to gain rapid acceptance of his ideas because he was politically well situated (central to the Royal Society, for example) and canny with his rhetoric. It seemed to me that the reception of scientific ideas when they first appear surely is, both then and now, conditioned by social factors. It surely is the case that some such ideas, though they might indeed now be revealed as superior to the alternatives, were more quickly taken up at the time not just (or even) because they were more convincing or better supported by evidence but because of the way their advocates were able to corner the market or rewrite the discourse in their favour. Lavoisier’s “new chemistry” is the obvious example. Indeed, David recognizes those social aspects of scientific debate in his book, which is one of its many strengths. I certainly don’t think Simon would argue that scientific ideas might then stay fixed for hundreds of years simply because their initial proponents gained the upper hand in the cut and thrust of debate.

David says that Steven Shapin does betray an affiliation to extreme relativism, however – and he cites as evidence Shapin’s comment in his (unsurprisingly damning) review of the Weinberg book:

“Science remains almost unique in that respect. It’s modernity’s reality-defining enterprise, a pattern of proper knowledge and of right thinking, in the same way that—though Mr. Weinberg will hate the allusion—Christian religion once defined what the world was like and what proper knowledge should be.”

This is a complicated claim, and I would like to know more about what Shapin meant by it. Perhaps I will ask him. I can see why David might interpret it as a statement to the effect that the scientific theory of the origin of the universe is no more “true” than the account given in Genesis. And I think he is right to point out that Shapin should be alert to the possibility of that interpretation. But I think one can also interpret the remark as saying that we should be as wary of scientism – the idea that the only knowledge that counts as proper knowledge is scientific – as we should be of the doctrinaire Christianity that once pervaded Western thought, which was once the jury before which all ideas were to be scrutinized. Christian theology was certainly regarded at times as a superior arbiter to pre-scientific rationalism in efforts to understand the universe (for example, in the 1277 Condemnation that pitched Aristotelian natural history against the Church). But just as Christianity was finally compelled to stay within the proper limits of its authority (in most parts of the civilized Christian world, if not perhaps Kansas), so should we make sure that science does so: it is the best method we have for understanding the physical world, but not the yardstick for all “proper knowledge”. I hope this is what Shapin means, but I confess that I cannot be sure.

The real problem here – and it is one that David rightly complains about – is not so much excessive relativism in the academic study of the history of science, but what he calls a conspiracy of silence within that discipline. It seems to have become taboo to say that scientific knowledge goes through a reliability filter that makes it rather dependable, predictive and amenable to improvement – even if you believe that to be the case. As a historian of science, David must be regularly faced with disapproving frowns and tuts if he wishes to express value judgements about scientific ideas, because this seems to have become bad form and now to be rather rigidly policed in some quarters.

I have experienced this myself, when a publisher’s reviewer of my book Invisible evidently felt it his/her duty to scour it for the slightest taint of presentism – and, when he/she decided it had been detected, to reel out what was obviously a pre-prepared little spiel to that effect. For example, I was sternly told that

“Hooke and Leeuwenhoek did not "in fact" see "single-celled organisms called protozoa". They also did not drive modern cars, neither did they long for a new iphone.”

This is of course just silly (not to say rather incoherent) academic Gotcha-style point-scoring. What I wrote was “It was Leeuwenhoek’s discoveries of invisibly small ‘animals’ – he was in fact seeing large bacteria and single-celled organisms called protozoa – in 1676…” Outrageous, huh?

Then I got some nonsense about "Great Men" histories because I had the temerity to mention that Pasteur and Koch did some important work on germ theory. The reviewer’s terror of making what his/her colleagues would regard as a disciplinary faux pas seems to be preventing him/her from being able to actually tell any history.

The situation in that case became clear enough when the reviewer finally complained that it was hard to judge my argument because what he/she needed was “a clear statement of the author's intent and theoretical position” – followed by “rewriting the whole text in such a way that the author clearly articulates his chosen positions throughout.” To which I’m afraid I replied: “What is my “theoretical position”? It’s in the text, not in some badge that I choose to display at the outset. The persistent misreading of the text to force it into one camp or another [and the cognitive dissonance evident when it doesn’t quite fit] seems to highlight a pretty serious problem with the academic approach, for all that I benefit from it shamelessly.”

So perhaps David will understand (I suspect he does already) that I have considerable sympathy with his predicament. I just wonder if his frustration (like mine) leaked out a little too much. I don’t know if he is right to say that “The [Oxford] faculty, as a group of professional historians, feels it must ward off anyone interested in studying science as a project that succeeds and makes progress, and at the same time encourage anyone who wants to study science as a purely social enterprise” – and if he is, that doesn’t seem terribly healthy. But the job advert he quotes doesn’t seem to me to deny the possibility of progress, but simply to point out that the primary job of the historian is not to sift the past for nuggets of the present.

Which of course brings me to Weinberg. He apparently wants to reshape the history of science, although his response to critics in the NYRB makes me more sympathetic to the sincerity, if not to the value, of his programme. I wonder if we might get a little clearer about the issues here by considering how one might wish to, say, write about medieval and early modern witchcraft. I wonder if what David sees as an unconscionable silence from historians on the veracity and validity of witchcraft is more a matter of historians thinking that, in the 21st century, one should not feel obliged to begin a paper or a book with a statement along the lines of

“I must point out that witchcraft is not a very effective way to understand the world, and if you wish to make a flying device, you will be far better advised to use the modern theory of fluid mechanics.”

On the other hand, if said author were to be quizzed along the lines of “But does witchcraft make broomsticks fly?”, it would be intellectually feeble, indeed derelict, to respond “That’s not the issue I am addressing, and I do not propose to comment on it.” David implies that this happens; I suspect he is right, though I do not know how often. There doesn’t seem to be anything sacrificed by saying instead something like: “Of course, witchcraft will not summon demons and make people fly. Now let me get on with talking about it.”

The Weinberg position, on the other hand, seems to be along the lines of “By all means study witchcraft as history, if you like, but as far as science is concerned we should make it absolutely clear that it was just superstitious nonsense that got in the way of true progress.” To which, of course, the historian might want to say “But Robert Boyle believed that demons exist and could be summoned!” The Weinbergian (I don’t want to put words into his own mouth) might respond, “Well Boyle wasn’t perfect and he believed some pretty daft things – like alchemical transmutation.”

And at that point I say “You really don’t give a toss what Robert Boyle thought, do you? You just want to mark his homework.” But I do give a toss, and not just because Boyle was an interesting thinker, or because I don’t have any illusion that we are smarter today than people were in the seventeenth century. I want to take seriously what Boyle thought and why, because it is a part of how ideas have developed, and because I don’t believe the history of science was a process of gradually shaking off delusions and misapprehensions and refining our rationality. It is much messier than that, now and always. If your starting position in assessing Boyle’s belief in demons and alchemy is that he was sometimes a bit gullible and deluded, then you are simply not going to get much of a grasp of what or how he thought. (Boyle was somewhat gullible when it came to alchemical charlatans, but his belief in transmutation wasn’t a part of that credulity.)

My own position is more along the lines of “It’s interesting that people once believed in witchcraft. I wonder what sustained that belief, and how it interacted with emerging ideas about science?” I am not being disingenuous if I say that I am inevitably a naïve reader of Shapin, Schaffer, Daston, Fara, and indeed David Wootton. But I find this same spirit in all of their books, and that’s what I appreciate in them.

Comments from David Wootton

A number of the reviews of The Invention of Science have expressed puzzlement that my book opens and closes with extensive historiographical, methodological, and philosophical discussions. Why not just leave all that stuff out? The charge is that I am refighting the Science Wars of the 1990s when everyone else has moved on. I understand why people would think this, but, with respect, I think they are wrong. Let’s break down the issues as follows:

1) Are relativists still confident that they speak for the history of science profession? Yes they are. See for example Steven Shapin’s breathtaking review of Steven Weinberg in the Wall Street Journal, where Shapin actually presents belief in science as being strictly comparable to belief in Christianity (http://goo.gl/qULelt) [1]. Or see Shapin’s and Schaffer’s introduction to the anniversary edition of Leviathan and the Air Pump (2011). Or see Peter Dear’s “Historiography of Not-So-Recent Science”, History of Science 50 (2012), 197-211 (“we are all postmodernists now”).

2) Are students still taught from relativist textbooks? Yes they are. The key textbooks are Shapin’s Scientific Revolution (1996; now translated into seventeen languages); Peter Dear’s Revolutionizing the Sciences (2001, revised in 2009); John Henry’s The Scientific Revolution (1997, with later revisions). This may change – there is Principe’s Very Short Introduction (2011), for example – but it hasn’t changed yet.

3) Has the profession moved on? Rather than moving on, it has decided to pretend the Science Wars never happened, and as a consequence it is stuck in a rut, incapable of generating a new account of what was happening in science in the early modern period. To quote Lorraine Daston’s 2009 essay on the present state of the discipline (http://goo.gl/rMEAiy), what historians have produced is “a swarm of microhistories ... archivally based and narrated in exquisite detail.” These microhistories, as she herself acknowledges, do not enable one to put together a bigger picture. The resulting confusion is embodied, for example, in David Knight’s Voyaging in Strange Seas: the Great Revolution in Science (Yale, 2014).

4) Are the relativists more moderate than I maintain? Philip Ball thinks I and the authors of Leviathan and the Air Pump have more in common than I imagine. I doubt Shapin and Schaffer will think so, and I suggest Philip rereads p. 342 of that book, which maintains that the success of experimental science depended on its proponents’ “political success ... in insinuating themselves into the activities of other institutions and other interest groups. He who has the most, and the most powerful, allies wins.” In this sort of story the evidence counts for nothing – indeed, the strong programme insists that the evidence must count for nothing (and note the introduction of the strong programme’s key principle of symmetry on p. 5)[2].

5) Can you separate methodology and historiography from substantive history? It’s very difficult to do so, because your methodology and the previous history of your discipline shape the questions you ask and the answers you give. Thus relativist historiography has privileged controversy studies (http://goo.gl/uVfxFF), and simply ignored cases where new scientific claims have been accepted without dispute. Indeed if the Duhem-Quine thesis were right there are always grounds for dispute when new evidence is presented. I don’t see how one can discuss the collapse of Ptolemaic astronomy in the years immediately after 1610 without acknowledging that this is an event which has been invisible to previous historians because they have been unwilling to acknowledge that an empirical fact (the phases of Venus) could be decisive in determining the fate of a well-established theory – in a case like this it is not the evidence that is new, but the questions that are being asked of it, and these are inseparable from issues of methodology and historiography [3].

6) The Economist thinks I have a disagreement with a few “callow” relativists. Odd that these insignificant people hold chairs in Harvard, Cambridge, Oxford, Edinburgh, Cornell. But there is a much bigger point here: a fundamental claim made by my opponents is that historians are committed, in principle, to treating bad and good knowledge identically. The historical profession tends to agree with them (see for example Gordon Wood’s NYRB essay on medicine in the American Revolution, http://goo.gl/ZoFuMu: “The problem is most historians are relativists”).

The consequences are apparent in the Cambridge History of Science, vol. 3, ed. Park and Daston (2006), which contains a twenty page chapter on “Coffee Houses and Print Shops” (as part of a two hundred page section on “Personae and Sites of Natural Knowledge”) and others equally long on “Astrology” and “Magic” (Astrology gets twenty pages while Astronomy gets thirty), but, despite being 850 pages long, contains no extended discussion of Digges, Stevin, Gilbert, or Pascal, nothing on magnets, and only two pages on vacuum experiments [4].

It is also apparent in Oxford University’s recent (April 2015) advertisement for its Chair in the History of Science which stated: “The professor will share the faculty’s vision of the scope of the history of science, which is less focused on the history of scientific truth and more interested in reconstructing the practices of science, and the claims to science-based authority within given societies at given times” [5]. The Oxford Faculty of History does not declare its vision of the scope of the discipline when advertising its chair in, say, military history. But the history of science is different. The faculty, as a group of professional historians, feels it must ward off anyone interested in studying science as a project that succeeds and makes progress, and at the same time encourage anyone who wants to study science as a purely social enterprise. What interests them is not scientific knowledge but the authority claimed by “scientists” — be they alchemists or phrenologists. What’s at stake here is not just the history of science, but also the claim, made over and over again by historians, that the past must be studied solely in its own terms — an approach which may lead to understanding, but cannot lead to explanation. So historians of witchcraft report encounters with devils as if the devils were real — and never ask what’s really going on.

7) What is science? I was dismayed to discover that students in my own university were being taught (by someone with a new PhD in history of science from a prestigious institution) that there was no such thing as science in the seventeenth century. But this, after all, is what Henry’s textbook says, and Dear in his 2012 review essay confidently asserts: “specialist historians seem increasingly agreed that science as we now know it is an endeavour born of the nineteenth century.” On her university website one distinguished historian of science is described thus: “Paula Findlen teaches history of science before it was ‘science’ (which is, after all, a nineteenth-century word).” (http://web.stanford.edu/dept/HPS/findlen.html, accessed 7 Dec 2015). How have we got to the point where it appears to make sense to claim that “science” is a nineteenth-century word? Because Newton, we are told, was not a scientist (which indeed is a nineteenth-century word) but a philosopher. Even if one charitably rephrases Findlen’s statement (or the statement made on her behalf) to read “‘science’ as we currently use the term is a nineteenth-century concept” it would be wrong unless, by a circular argument, one insists that earlier usages of the word can’t possibly have meant by science what we mean by science. The whole point of my book is to show that by the end of the seventeenth century “science” (as Dryden called it) really was science as we understand the term. To unpick the misconception that there was no science in the seventeenth century you have to look at the history of words like “science” and “scientist” (noting, for example, the founding of the French Académie des Sciences in 1666), but also at an historiographical tradition which has insisted that what we think of as science is just a temporary and arbitrary social practice, like metaphysical poetry or Methodism, not an enduring and self-sustaining body of reliable knowledge.

8) What would have happened if I had left out the methodological and historiographical debates? I tried the alternative approach, of writing in layperson’s terms for commonsensical people, first. Just look at how my book Bad Medicine was treated by Steven Shapin, in the pages of the London Review of Books: http://goo.gl/aA67fr! The book was a success in that lots of people read it and liked it, many of them doctors (see www.badmedicine.co.uk); but historians of medicine brushed it off. So this time I have felt obliged to address the core arguments which supposedly justify ignoring progress – the arguments that have bamboozled the profession for the last fifty years – in the hope of being taken a little more seriously, not by sensible people (who can’t understand why I don’t just cut to the chase), but by the professionals who think that the history of science is like cardiac surgery – not something “the laity” (Shapin’s peculiar term) can possibly participate in, understand, or criticise, but something for the professionals alone. In trying to address this new clerisy I have evidently tried the patience of some of my more sensible, level-headed readers. That’s unfortunate and a matter of considerable regret: but if the way in which history of science is taught in the universities is to change, someone must take on the experts on their own ground, and someone must question the notion that the history of science ought not to concern itself with (amongst much else) the history of scientific truth. By all means skip the beginning and concluding chapters if you have no interest in how the history of science (and history more generally) is taught; but please read them carefully if you do.

Notes

[1] There is a paywall: to surmount it google “Why Scientists Shouldn’t Write History” and click on the first link. For a discussion see http://goo.gl/VYNVhX. I am grateful to Philip Ball for acknowledging that my book is very different in character from Weinberg’s, which saves me from having to stress the point.

[2] Patricia Fara thinks that social constructivism is “the idea that what people believe to be true is affected by their cultural context.” If that were the case then we would all be social constructivists and I really would be arguing with a straw person. But of course it isn’t, as I show over and over again in my book. It is, rather, the claim (made by her Cambridge colleague Andrew Cunningham) that science is “a human activity, wholly a human activity, and nothing but a human activity” — in other words that it is socially constituted, not merely socially influenced (the model for such an argument being, of course, Durkheim on religion). The consequence of this, constructivists rightly hold, is epistemological egalitarianism — any particular belief is to be regarded as being just as good as any other.

[3] Take for example William Donahue’s discussion of Galileo and the phases of Venus in Park and Daston, 585: “He argued... that this phenomenon was inconsistent with the Ptolemaic arrangement of the planets...” Galileo and his contemporaries understood perfectly well that Galileo had proved the Ptolemaic arrangements of the planets could not be right — the whole impact of Galileo’s discovery is lost by reducing it to a mere argument. Indeed Donahue does not acknowledge that it had any impact while I show the impact is measurable by counting editions of Sacrobosco.

[4] A colleague of mine unkindly calls this the Polo history: Polo Mints, to quote Wikipedia, “are a brand of mints whose defining feature is the hole in the middle.”

[5] The text is no longer on the Oxford University website, but can still be found, for example, at http://goo.gl/KOY05f (accessed 7 Dec 2015).

Friday, December 18, 2015

Thursday, December 03, 2015

Can science be made to work better?

Here is a longer version of the leader that I wrote for Nature this week.

_______________________________________________________________________

Suppose you’re seeking to develop a technique for transferring proteins from a gel to a plastic substrate for easier analysis. Useful, maybe – but will you gain much kudos for it? Will it enhance the reputation of your department? One of the sobering findings of last year’s survey of the 100 most cited papers on the Web of Science (Nature 514, 550; 2014) was how many of them reported such apparently mundane methodological research (this one was number six).

Not all prosaic work reaches such bibliometric heights, but that doesn’t deny its value. Overcoming the hurdles of nanoparticle drug delivery, for example, requires the painstaking characterization of pathways and rates of breakdown and loss in the body: work that is probably unpublishable, let alone unglamorous. One can cite comparable demands of detail for getting just about any bright idea to work in practice – but it’s the initial idea, not the hard grind, that garners the praise and citations.

An aversion to routine yet essential legwork seems at face value to be quite the opposite of the conclusions of a new study on how scientists pick their research topics. This analysis of discovery and innovation in biochemistry (A. Rzhetsky et al., Proc. Natl Acad. Sci. USA 112, 14569; 2015) finds that, in this field at least, choices of research problems are becoming more conservative and risk-averse. The results suggest that this trend over the past 30 years is quite the reverse of what is needed to make scientific discovery efficient.

But these problems – avoidance of both risk and drudge – are just opposite sides of the same coin. They reflect the fact that scientific norms, institutions and reward structures increasingly force researchers to aim at a “sweet spot” that will maximize their career prospects: work that is novel enough to be publishable but orthodox enough not to alarm or offend referees. That situation is surely driven in large degree by the importance attached to citation indices, as well as by the insistence of grant agencies that the short-term impact of the work can be defined in advance.

One might quibble with the necessarily crude measures of research strategy and knowledge generation employed in the PNAS study. But its general conclusion – that current norms discourage risk and therefore slow down scientific advance, and that the problem is worsening – rings true. It’s equally concerning that the incentives for boring but essential collection of fine-grained data to solve a specific problem are vanishing in a publish-or-perish culture.

A fashionably despairing cry of “Science is broken!” is not the way forward. The wider virtue of Rzhetsky et al.’s study is that it floats the notion of tuning practices and institutions to accelerate the process of scientific discovery. The researchers conclude, for example, that publication of experimental failures would assist this goal by avoiding wasteful repetition. Journals chasing impact factors might not welcome that, but they are no longer the sole repositories of scientific findings. Rzhetsky et al. also suggest some shifts in institutional structures that might help promote riskier but potentially more groundbreaking research – for example, spreading both risk and credit among teams or organizations, as used to be common at Bell Labs.

The danger is that efforts to streamline discovery simply become codified into another set of guidelines and procedures, creating yet more hoops that grant applicants have to jump through. If there’s one thing science needs less of, it is top-down management. A first step would be to recognize the message that research on complex systems has emphasized over the past decade or so: efficiencies are far more likely to come from the bottom up. The aim is to design systems with basic rules of engagement for participating agents that best enable an optimal state to emerge. Such principles typically confer adaptability, diversity, and robustness. There could be a wider mix of grant sources and sizes, say, less rigid disciplinary boundaries, and an acceptance that citation records are not the only measure of worth.

But perhaps more than anything, the current narrowing of objectives, opportunities and strategies in science reflects an erosion of trust. Obsessive focus on “impact” and regular scrutiny of young (and not so young) researchers’ bibliometric data betray a lack of trust that would have sunk many discoveries and discoverers of the past. Bibliometrics might sometimes be hard to avoid as a first-pass filter for appointments (Nature 527, 279; 2015), but a steady stream of publications is not the only or even the best measure of potential.

Attempts to tackle these widely acknowledged problems are typically little more than a timid rearranging of deckchairs. Partly that’s because they are seen as someone else’s problem: the culprits are never the complainants, but the referees, grant agencies and tenure committees who oppress them. Yet oddly enough, these obstructive folk are, almost without exception, scientists too (or at least, once were).

It’s everyone’s problem. Given the global challenges that science now faces, inefficiencies can exact a huge price. It is time to get serious about oiling the gears.

_______________________________________________________________________

Suppose you’re seeking to develop a technique for transferring proteins from a gel to a plastic substrate for easier analysis. Useful, maybe – but will you gain much kudos for it? Will it enhance the reputation of your department? One of the sobering findings of last year’s survey of the 100 most cited papers on the Web of Science (Nature 514, 550; 2014) was how many of them reported such apparently mundane methodological research (this one was number six).

Not all prosaic work reaches such bibliometric heights, but that doesn’t deny its value. Overcoming the hurdles of nanoparticle drug delivery, for example, requires the painstaking characterization of pathways and rates of breakdown and loss in the body: work that is probably unpublishable, let alone unglamorous. One can cite comparable demands of detail for getting just about any bright idea to work in practice – but it’s the initial idea, not the hard grind, that garners the praise and citations.

An aversion to routine yet essential legwork seems at face value to be quite the opposite of the conclusions of a new study on how scientists pick their research topics. This analysis of discovery and innovation in biochemistry (A. Rzhetsky et al., Proc. Natl Acad. Sci. USA 112, 14569; 2015) finds that, in this field at least, choices of research problems are becoming more conservative and risk-averse. The results suggest that this trend over the past 30 years is quite the reverse of what is needed to make scientific discovery efficient.

But these problems – avoidance of both risk and drudge – are just opposite sides of the same coin. They reflect the fact that scientific norms, institutions and reward structures increasingly force researchers to aim at a “sweet spot” that will maximize their career prospects: work that is novel enough to be publishable but orthodox enough not to alarm or offend referees. That situation is surely driven in large degree by the importance attached to citation indices, as well as by the insistence of grant agencies that the short-term impact of the work can be defined in advance.

One might quibble with the necessarily crude measures of research strategy and knowledge generation employed in the PNAS study. But its general conclusion – that current norms discourage risk and therefore slow down scientific advance, and that the problem is worsening – rings true. It’s equally concerning that the incentives for boring but essential collection of fine-grained data to solve a specific problem are vanishing in a publish-or-perish culture.

A fashionably despairing cry of “Science is broken!” is not the way forward. The wider virtue of Rzhetsky et al.’s study is that it floats the notion of tuning practices and institutions to accelerate the process of scientific discovery. The researchers conclude, for example, that publication of experimental failures would assist this goal by avoiding wasteful repetition. Journals chasing impact factors might not welcome that, but they are no longer the sole repositories of scientific findings. Rzhetsky et al. also suggest some shifts in institutional structures that might help promote riskier but potentially more groundbreaking research – for example, spreading both risk and credit among teams or organizations, as used to be common at Bell Labs.

The danger is that efforts to streamline discovery simply become codified into another set of guidelines and procedures, creating yet more hoops that grant applicants have to jump through. If there’s one thing science needs less of, it is top-down management. A first step would be to recognize the message that research on complex systems has emphasized over the past decade or so: efficiencies are far more likely to come from the bottom up. The aim is to design systems with basic rules of engagement for participating agents that best enable an optimal state to emerge. Such principles typically confer adaptability, diversity, and robustness. There could be a wider mix of grant sources and sizes, say, less rigid disciplinary boundaries, and an acceptance that citation records are not the only measure of worth.

But perhaps more than anything, the current narrowing of objectives, opportunities and strategies in science reflects an erosion of trust. Obsessive focus on “impact” and regular scrutiny of young (and not so young) researchers’ bibliometric data betray a lack of trust that would have sunk many discoveries and discoverers of the past. Bibliometrics might sometimes be hard to avoid as a first-pass filter for appointments (Nature 527, 279; 2015), but a steady stream of publications is not the only or even the best measure of potential.

Attempts to tackle these widely acknowledged problems are typically little more than a timid rearranging of deckchairs. Partly that’s because they are seen as someone else’s problem: the culprits are never the complainants, but the referees, grant agencies and tenure committees who oppress them. Yet oddly enough, these obstructive folk are, almost without exception, scientists too (or at least, once were).

It’s everyone’s problem. Given the global challenges that science now faces, inefficiencies can exact a huge price. It is time to get serious about oiling the gears.

Friday, October 16, 2015

The ethics of freelance reporting

There’s a very interesting post (if you’re a science writer) on journalistic ethics from Erik Vance here. I confess that I’ve been blissfully ignorant of this PR sideline that many science writers apparently have. It makes for a fairly clear division – either you’re writing PR or you’re not – but it doesn’t speak to my situation, and I can’t be alone in that. Erik worries about stories that come out of “institutionally sponsored trips”. I’m not entirely clear what he means by that, but I’m often in a situation like this:

A lab or department has asked if I might come and give a talk or take part in a seminar or some such. They’ll pay my expenses, including accommodation if necessary. And if I think it’ll be interesting, I’ll try to do it.

Is this then a junket? You see, what often happens is that the institute in question might line up a little programme of visits to researchers at the place in question, because I might find their work interesting or perhaps just because they would like to talk to me. And indeed I might well find their work interesting and want to write about it, or perhaps about the broader issues of the field they bring to my attention.

Now the question is: am I compromised by having the trip paid for me? Even more so on those rare occasions that I’m paid an honorarium? It’s for such reasons that Nature would always insist that the journal, not the visited institution, pays the way for its writers. This seems fair enough for a journal, but shouldn’t the same apply to a freelancer then?

I could say that life as a freelancer is already hard enough, given for example the more or less permanent freeze in pay rates, without our having to pay ourselves for any travelling that might produce a story (not least because you don’t always know that in advance). When a journal writer goes to give a talk or makes a lab visit, they are being paid by their employer to do it. As a freelancer, you are sacrificing working time to do that, and so are essentially already losing money by making the trip even if your travel and accommodation are covered.

But that doesn’t really answer the question, does it? It doesn’t mean that the piece you write is uncompromised just because you couldn’t afford to have gone if your expenses weren’t paid.

I don’t know what the answer is here. I do know that as a freelancer you’ll only get to write a piece if you pitch it to an editor who likes it, i.e. if it is a genuinely good story in the first place. In fact, you’ll probably only want to write it anyway if you sense it’s a good story yourself, and you do yourself no favours by pitching weak stories. But will your coverage be influenced by having been put up at a nice (if you’re lucky!) hotel by the institution? Erik is right to warn about unconscious biases, but I can’t easily see why the story would come out any different than if you’d come across the same work in a journal paper – you’d still be getting outside comment on it from objective specialists and so on. Still, I might be missing some important considerations here, and would be glad to have any pointed out to me.

It seems to me that a big part of this comes down to the attitude of the writer. If you start off from the position that you’re a cheerleader for science, you’re likely to be uncritical however you discover the story. If you consider yourself a critic in the proper sense, like a music or theatre critic, you’ll tend to look at the work accordingly. The same, it seems to me, has always applied to the issue of showing the authors a draft of the piece you’ve written about their work. Some journalists consider this an absolute no-no. I’ve never really understood why. If the scientist comes back pointing out technical errors in the piece, as they often do (and almost invariably in the nicest possible way), you get to give your readers a more accurate account. If they start demanding changes that seem unnecessary, interfering or pedantic, for example insisting that Professor Plum’s comments on their work are way off key, you just say sorry guys, this is the way it stays. That’s surely the job of a journalist. I can’t remember a time when feedback from authors on a rough draft was ever less than helpful and improving. So I guess I just don’t see what the problem is here.

But I am very conscious that I’ve never had any real training, as far as I can recall, in ethics in journalism. So I might be out of touch with what the issues are.

Multiverse of Stone

This summer I went to one of the most extraordinary scientific gatherings I’ve ever attended. Where else would you find Martin Rees, Rolf Heuer, Carlos Frenk, Alex Vilenkin and Bernard Carr assembled to talk about the multiverse idea? The meeting was convened by architect and designer Charles Jencks to mark the opening of his remarkable new landscape, the Crawick Multiverse, in Dumfries on the Scottish borders. And the setting was no less striking: it took place in Drumlanrig Castle, a splendid baronial edifice that is the ancestral home of the Duke of Buccleuch, whose generosity and hospitality made it probably the most congenial meeting I’ve ever been to. Representing the humanities were Mary-Jane Rubenstein, whose excellent book Worlds Without End (2014) places the multiverse in historical and theological perspective, Martin Kemp, who talked about spirals in nature (look out for Martin’s forthcoming book Structural Intuitions) and Michael Benson, whose Cosmigraphics (2014) shows how we have depicted and conceptualized the universe over time. I talked about pattern formation in nature.

Despite all this, the piece that I wrote about the event has not found a home, having fallen between too many stools in various potential forums. So I’ll put it here. You will also be able to download a pdf of this article from my website here, once some site reworking has been completed.

______________________________________________________________________

With the Crawick Multiverse, landscape architect and designer Charles Jencks has set the archaeologists of the future a delightful puzzle. They will spin theories of various degrees of fancifulness to explain why this earthwork was built in the rather beautiful but undeniably stark wilds of Dumfries and Galloway. Is there a cosmic significance in the alignment of the stone-flanked avenue? What do these twinned spiralling tumuli denote, these little crescent lagoons, these radial splashes of stone paving? Whence these cryptic inscriptions “Balanced Universe” and “PIC” on slabs and monoliths?

The Crawick Multiverse

If any futurist historian is on hand to explain, there are two ways in which her story might go. Either she will say that the monument marks the moment when ancient science awoke to the realization that, as every child now knows, ours is not the only universe but is merely one among the multiverse of worlds, all springing perpetually into existence in an expanding matrix of “false vacuum”, each with its unique laws of physics. Or she will explain (with a warning that we should not Whiggishly mock the seemingly odd and absurd ideas of the past) that the Crawick site was built at a time when scientists seriously entertained so peculiar and now obviously misguided a notion.

If only we could tell which way it will go! But right now, that’s anyone’s guess. Whatever the outcome, Jencks, the former theorist of postmodernism who today takes risks simultaneously intellectual, aesthetic, critical and financial in his efforts to represent scientific ideas about the cosmos at a herculean scale, has created an extraordinarily ambitious landscape that manages to blend Goldsworthy-style nature art with cutting-edge cosmology and more than a touch of what might be interpreted as New Age paganism. At the grand opening of the Crawick (pronounced “Croyck”) Multiverse in late June, no one seemed too worried about whether the science will stand up to scrutiny. Instead there were pipe bands, singing schoolchildren, performance art and generous blasts of Caledonian weather.

Jencks is no stranger to this kind of grand statement. His house at Portrack, near Dumfries and a 30-minute drive from Crawick, sits amidst the Garden of Cosmic Speculation, a landscape of undulating turf terraces, stones, water pools and ornate metal sculptures that represents all manner of scientific ideas, from the spacetime-bending antics of black holes and the helical forms of DNA to mathematical fractals and the “symmetry-breaking” shifts that produced structure and order in the early universe. Jencks opens the garden to the public for one day each year to raise funds for Maggie’s Centres, the drop-in centres for cancer patients that Jencks established after the death of his wife Maggie Keswick Jencks from cancer in 1995.

A panorama of Charles Jencks’ Garden of Cosmic Speculation at Portrack House, Dumfries. (Photo: Michael Benson.)

Jencks also designed the lawn that fronts the Scottish National Gallery of Modern Art in Edinburgh, a series of crescent-shaped stepped mounds and pools inspired by chaos theory and “the way nature organizes itself”, in Jencks’ words. By drawing on cutting-edge scientific ideas, Jencks has cultivated strong ties with scientists themselves, and a plan for a landscape at the European particle-physics centre of CERN, near Geneva, sits on the shelf, awaiting funding.

Charles Jencks’ science-inspired land art in the Garden of Cosmic Speculation (top) and the garden of the Scottish National Gallery of Modern Art in Edinburgh (bottom).

The Multiverse project began when the Duke of Buccleuch and Queensberry, whose ancestral home at Drumlanrig Castle stands near to Crawick, asked Jencks to reclaim the site, dramatically surrounded by rolling hills but disfigured by the slag heaps from open-cast coal mining. When work began in 2012, the excavations unearthed thousands of boulders half-buried in the ground, which Jencks has used to create a panorama of standing stones and sculpted tumuli.

“As we discovered more and more rocks, we laid out the four cardinal points, made the north-south axis the primary one, and thereby framed both the far horizons and the daily and monthly movements of the sun”, Jencks says. “One theory of pre-history is that stone circles frame the far hills and key points, and while I wanted to capture today’s cosmology not yesterday’s, I was aware of this long landscape tradition.”

Visitors to the site should recognize the spiral form of our own Milky Way Galaxy, Jencks says – but the layout invites them to delve deeper into cosmogenic origins. The Milky Way, he says, “emerged from our Local Group of galaxies, but where did they come from? From the supercluster of galaxies, and where did they come from? From the largest structures in the universe, the web of filaments? And so on and on.” Ultimately this leads to the questions confronted by theories of the Big Bang in which our own universe is thought to have formed – and to questions about whether this cosmic outburst, or others, might also have spawned other universes, or a multiverse.

How many universes do you need?

A decade or two ago, allusions to the notion that there are many – perhaps infinitely many – universes would have been regarded as dabbling on the fringes of respectable science. Now the multiverse idea is embraced by many leading cosmologists and other physicists. That’s not because we have any evidence for it, but because it seems to offer a simultaneous resolution to several outstanding problems on the wild frontier where fundamental physics – the science of the immeasurably small – blends with cosmology, which attempts to explain the origin and evolution of all the vastness of space.

“In the last twenty years the multiverse has developed from an exotic speculation into a full-blown theory”, says Jencks. “From avant-garde conjecture held by the few to serious hypothesis entertained by the many, leading thinkers now believe the multiverse is a plausible description of an ensemble of universes.”

To explore how the multiverse came in from the cold, Jencks convened a gathering of cosmologists and particle physicists whose eminence would rival the finest of international science conventions. While the opening celebrations braved the elements at Crawick, the scientists were hosted by the duke at Drumlanrig Castle – perhaps the most stunning example of French-inflected Scottish baronial architecture, fashioned from the gorgeous red stone of Dumfries. In one long afternoon while the sun conveyed its rare blessing on the jaw-dropping gardens outside, these luminaries explained to an invited audience why they have come to suppose a multiplicity of universes beyond all reasonable measure: why an understanding of the deepest physical laws is compelling us to make the position of humanity in the cosmos about as insignificant as it could possibly be.

Drumlanrig Castle near Dumfries, where scientists convened to discuss the multiverse.

It was a decidedly surreal gathering, with PowerPoint presentations on complex physics amidst Louis XIV furniture, while massive portraits of the duke’s illustrious ancestors (including Charles II’s unruly illegitimate son the 1st Duke of Monmouth) looked on. When art historian Martin Kemp, opening the proceedings with a survey of spiral patterns, discussed the nature art of Andy Goldsworthy, only to have the artist himself pop up to explain his intentions, one had to wonder if we had already strayed into some parallel universe.

Martin Rees, Astronomer Royal and past President of the Royal Society, suggested that the multiverse theory represents a “fourth Copernican revolution”: the fourth time since Copernicus shoved the earth away from the centre of creation that we have been forced to downgrade our status in the heavens. Yet curiously, this latest perspective also gives our very existence a central role in any explanation of why the basic laws of nature are the way they are.

Here’s the problem. A quest for the much-vaunted Theory of Everything – a set of “simple” laws, or perhaps just a single equation, from which all the other principles of physics can be derived, and which will achieve the much-sought reconciliation of gravity and quantum theory – has landed us in the perplexing situation of having more alternatives to choose from than there are fundamental particles in the known universe. To be precise, the latest version of string theory, which many physicists who delve into these waters insist is the best candidate for a “final theory”, offers 10^500 (1 followed by 500 zeros) distinct solutions: that many possible variations on the laws of physics, with no obvious reason to prefer one over any other. Some are tempted to conclude that this is the fault of string theory, not of the universe, and so prefer to ditch the whole edifice, which without doubt is built on some debatable assumptions and remains far beyond our means to test directly for the foreseeable future.

If that were all there was to it, you might well wonder if indeed we should be wiping the board clean and starting again. But cosmology now suggests that this crazy proliferation of physical laws can be put to good use. The standard picture of the Big Bang – albeit not the one that all physicists embrace – posits that, a fraction of a second after the universe began to expand from its mysterious origin, it underwent a fleeting instant of expansion at an enormous rate, far faster than the speed of light, called inflation. This idea explains, in what might seem like but is not a paradox, both why the universe is so uniform everywhere we look and why it is not perfectly so. Inflation blew up the “fireball” to a cosmic scale before it had a chance to get too clumpy.

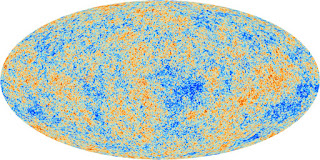

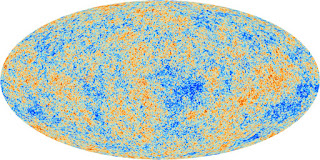

That primordial state would, however, have been unavoidably ruffled by the tiny chance variations that quantum physics creates. These fluctuations are now preserved at astronomical scales in slight differences in temperature of the cosmic microwave background radiation, the faint afterglow of the Big Bang itself that several satellite-based telescopes have now mapped out in fine detail. As astrophysicist Carlos Frenk explained at Drumlanrig, the match between the spectrum of temperature variations – their size at different distance scales – predicted by inflationary theory and that measured is so good that, were it not so well attested in so huge an international effort, it would probably arouse suspicions of data-rigging.

The temperature variations of the cosmic microwave background, as mapped by the European Space Agency’s Planck space telescope in 2013. The tiny variations correspond to regions of slightly different density in the very early universe that seeded the formation of clumps of matter – galaxies and stars – today.

What has this got to do with multiverses? Well, to put it one way: if you have a theory for how the Big Bang happened as a natural phenomenon, almost by definition you no longer have reason to regard it as a one-off event. The current view is that the Big Bang itself was a kind of condensation of energy-filled empty space – the “true vacuum” – out of an unstable medium called the “false vacuum”, much as mist condenses from the moist air of the Scottish hills. But this false vacuum, for reasons I won’t attempt to explain, should also be subject to a kind of inflation in which it expands at fantastic speed. Then our universe appears as a sort of growing “bubble” in the false vacuum. But others do too: not just 13.8 billion years ago (the age of our universe) but constantly. It’s a scenario called “eternal inflation”, as one of its pioneers, cosmologist Alex Vilenkin, explained at the meeting. In this view, there are many, perhaps infinitely many, universes appearing and growing all the time.

The reason this helps with string theory is that it relieves us of the need to select any one of the 10^500 solutions it yields. There are enough homes for all versions. That’s not just a matter of accommodating homeless solutions to an equation. One of the most puzzling questions of modern cosmology is why the vacuum is not stuffed full of unimaginable amounts of energy. Quantum theory predicts that empty space should be so full of particles popping in and out of existence all the time, just because they can, that it should hold far more energy than the interior of a star. Evidently it doesn’t, and for a long time it was simply assumed that some unknown effect must totally purge the vacuum of all this energy. But the discovery of dark energy in the late 1990s – which manifests itself as an acceleration of the expansion of our universe – forced cosmologists to accept that a tiny amount of that vacuum energy does in fact remain. In this view, that’s precisely what dark energy is. Yet it is so tiny an amount – 10^-122 of what is predicted – that it seems almost a cosmic joke that the cancellation should be so nearly complete but not quite.

But if there is a multiverse, this puzzle of “fine-tuning” goes away. We just happen to be living in one of the universes in which the laws of nature are, out of all the versions permitted by string theory, set up this way. Doesn’t that seem too much of an extraordinary good fortune? Well no, because without this near cancellation of the vacuum energy, atoms could not exist, and so neither could ordinary matter, stars – or us. In any universe in which these conditions pertain, intelligent beings might be scratching their heads over this piece of apparent providence. In those – far more numerous – where that’s not the case, there is no one to lament it.

The pieces of the puzzle, bringing together the latest ideas in cosmology and fundamental physics, seem suddenly to dovetail rather neatly. Too neatly for some, who say that such arguments are metaphysical sleight of hand – a kind of cheating in which we rescue ourselves from theoretical problems not by solving them but by dressing them up as their own solution. How can we test these assertions, they ask? And isn’t it defeatist to accept that there’s ultimately no fundamental reason why the fundamental constants of nature have the values they do, because in other universes they don’t?

But there’s no denying that, without the multiverse, the “fine-tuning” problem of dark energy alone looks tailor-made for a theologian’s “argument by design”. If you don’t want a God, astrophysicist Bernard Carr has quipped (only half-jokingly), you’d better have a multiverse. It’s not the first time a “plurality of worlds” has sparked theological debate, as philosopher of religion Mary-Jane Rubenstein reminded the Drumlanrig gathering: the Dominican friar Giordano Bruno’s interpretation (albeit not simply his assertion) of such a multiplicity was partly what got him burnt at the stake in 1600.

Do these questions drift beyond science into metaphysics? Perhaps – but why should we worry about that, Carr asked the meeting? At the very least, if true science must be testable, who is to say on what timescale it must happen? (The current realistic possibilities at CERN are certainly more modest, as its Director General Rolf Heuer explained – but even they don’t exclude an exploration of other types of multiverse ideas, such as a search for the mini-black holes predicted by some theories that invoke extra, “invisible” dimensions of space beyond our familiar three.)

Reclaiming the multiverse

How much of all this finds its way into Jencks’ Crawick Multiverse is another matter. In line with his thinking about the hierarchy of “cosmic patterns” through which we trace our place in the cosmos, many of the structures depict our immediate environment. Two corkscrew hillocks represent the Milky Way galaxy and its neighbour Andromeda, while the local “supercluster” of galaxies becomes a gaggle of rock-paved artificial drumlins. The Sun Amphitheatre, which can house 5,000 people (though it’s a brave soul who organizes outdoor performances on a Scottish hillside at any time of year), is designed to depict the crescent shapes of a solar eclipse. The Multiverse itself is a mound up which mudstone slabs trace a spiral path, some of them carved to symbolize the different kinds of universe the theory predicts.

The local universe represented in the Crawick Multiverse.

But why create a Multiverse on a Scottish hillside anyway? Because, Jencks says, “it is our metaphysics, or at least is fast becoming so. And all art aspires to the condition of its present metaphysics. That’s so true today, in the golden age of cosmology, when the boundaries of truth, nature, and culture are being rewritten and people are again wondering in creative ways about the big issues.” “I wanted to confront the basic question which so many cosmologists raise: why is our universe so well-balanced, and in so many ways? What does the apparent fine-tuning mean, how can we express it, make it comprehensible, palpable?”

“Apart from all this”, he adds, “if you have a 55-acre site, and almost half the available money has to go into decontamination alone, then you’d better have a big idea for 2000 free boulders.”

Charles Jencks introduces his multiverse. (Photo: Michael Benson.)

The sculptures and forms of the Crawick Multiverse reflect Jencks’ own unique and sometimes impressionistic take on the theories. For example, he prefers to replace “anthropic” reasoning that uses our own observation of the observable universe as an explanation of apparent contingencies with the notion that this universe (at least) has a tendency to spawn ever more complexity: his Principle of Increasing Complexity (PIC). He is critical of some of science’s “Pentagon metaphors” – wimps and machos (candidates for the mysterious dark matter that exceeds the amount of ordinary visible matter by a factor of around four), selfish genes and so on. “The universe did not start in a big bang”, Jencks says. “It was smaller than a quark, and noise wasn’t its most significant quality.” He prefers the term “Hot Stretch”.

But his intention isn’t really pedagogical – it’s about giving some meaning to this former site of mining-induced desolation. “I hope to achieve, first, something for the economically depressed coal-mining towns in the area”, Jencks says. “Richard [Buccleuch] had an obligation to make good the desolation, and he feels this responsibility strongly. I wanted to create something that related to this local culture. Like Arte Povera it makes use of what is to hand: virtually everything comes from the site, or three miles away. Second, I was keen on getting an annual festival based on local culture – the pipers in the area, the Riding of the Marches, the performing artists, the schools.”

Visitors to the site seem likely to be offered only the briefest of introductions to the underlying cosmic themes. That’s probably as it should be, not only because the theories are so provisional (they’ll surely look quite different in 20 years’ time, when the earthworks have had a chance to bed themselves into the landscape) but because, just like the medieval cosmos encoded in the Gothic cathedrals, this sort of architecture is primarily symbolic. It will speak to us not like a lecture, but through what Martin Kemp has called “structural intuitions”, an innate familiarity with the patterns of the natural world. Some scientists might look askance at any suggestion that the Crawick Multiverse can be seen as a sacred place. But it’s hard to imagine how even the most secular of them, if they really take the inflationary multiverse seriously, could fail to find within it some of the awe that a peasant from the wheatfields of the Beauce must have experienced on entering the nave of Chartres Cathedral – a representation in stone of the medieval concept of an orderly Platonic universe – and stepping into its cosmic labyrinth.

Despite all this, the piece that I wrote about the event has not found a home, having fallen between too many stools in various potential forums. So I’ll put it here. You will also be able to download a pdf of this article from my website here, once some site reworking has been completed.

______________________________________________________________________

With the Crawick Multiverse, landscape architect and designer Charles Jencks has set the archaeologists of the future a delightful puzzle. They will spin theories of various degrees of fancifulness to explain why this earthwork was built in the rather beautiful but undeniably stark wilds of Dumfries and Galloway. Is there a cosmic significance in the alignment of the stone-flanked avenue? What do these twinned spiralling tumuli denote, these little crescent lagoons, these radial splashes of stone paving? Whence these cryptic inscriptions “Balanced Universe” and “PIC” on slabs and monoliths?

The Crawick Multiverse

If any futurist historian is on hand to explain, there are two ways in which her story might go. Either she will say that the monument marks the moment when ancient science awoke to the realization that, as every child now knows, ours is not the only universe but is merely one among the multiverse of worlds, all springing perpetually into existence in an expanding matrix of “false vacuum”, each with its unique laws of physics. Or she will explain (with a warning that we should not Whiggishly mock the seemingly odd and absurd ideas of the past) that the Crawick site was built at a time when scientists seriously entertained so peculiar and now obviously misguided a notion.

If only we could tell which way it will go! But right now, that’s anyone’s guess. Whatever the outcome, Jencks, the former theorist of postmodernism who today takes risks simultaneously intellectual, aesthetic, critical and financial in his efforts to represent scientific ideas about the cosmos at a herculean scale, has created an extraordinarily ambitious landscape that manages to blend Goldsworthy-style nature art with cutting-edge cosmology and more than a touch of what might be interpreted as New Age paganism. At the grand opening of the Crawick (pronounced “Croyck”) Multiverse in late June, no one seemed too worried about whether the science will stand up to scrutiny. Instead there were pipe bands, singing schoolchildren, performance art and generous blasts of Scottish weather.

Jencks is no stranger to this kind of grand statement. His house at Portrack, near Dumfries and a 30-minute drive from Crawick, sits amidst the Garden of Cosmic Speculation, a landscape of undulating turf terraces, stones, water pools and ornate metal sculptures that represents all manner of scientific ideas, from the spacetime-bending antics of black holes and the helical forms of DNA to mathematical fractals and the “symmetry-breaking” shifts that produced structure and order in the early universe. Jencks opens the garden to the public for one day each year to raise funds for Maggie’s Centres, the drop-in centres for cancer patients that Jencks established after the death of his wife Maggie Keswick Jencks from cancer in 1995.

A panorama of Charles Jencks’ Garden of Cosmic Speculation at Portrack House, Dumfries. (Photo: Michael Benson.)

Jencks also designed the lawn that fronts the Scottish National Gallery of Modern Art in Edinburgh, a series of crescent-shaped stepped mounds and pools inspired by chaos theory and “the way nature organizes itself”, in Jencks’ words. By drawing on cutting-edge scientific ideas, Jencks has cultivated strong ties with scientists themselves, and a plan for a landscape at the European particle-physics centre of CERN, near Geneva, sits on the shelf, awaiting funding.

Charles Jencks’ science-inspired land art in the Garden of Cosmic Speculation (top) and the garden of the Scottish National Gallery of Modern Art in Edinburgh (bottom).

The Multiverse project began when the Duke of Buccleuch and Queensberry, whose ancestral home at Drumlanrig Castle stands near to Crawick, asked Jencks to reclaim the site, dramatically surrounded by rolling hills but disfigured by the slag heaps from open-cast coal mining. When work began in 2012, the excavations unearthed thousands of boulders half-buried in the ground, which Jencks has used to create a panorama of standing stones and sculpted tumuli.

“As we discovered more and more rocks, we laid out the four cardinal points, made the north-south axis the primary one, and thereby framed both the far horizons and the daily and monthly movements of the sun”, Jencks says. “One theory of pre-history is that stone circles frame the far hills and key points, and while I wanted to capture today’s cosmology not yesterday’s, I was aware of this long landscape tradition.”

Visitors to the site should recognize the spiral form of our own Milky Way Galaxy, Jencks says – but the layout invites them to delve deeper into cosmogenic origins. The Milky Way, he says, “emerged from our Local Group of galaxies, but where did they come from? From the supercluster of galaxies, and where did they come from? From the largest structures in the universe, the web of filaments? And so on and on.” Ultimately this leads to the questions confronted by theories of the Big Bang in which our own universe is thought to have formed – and to questions about whether this cosmic outburst, or others, might also have spawned other universes, or a multiverse.

How many universes do you need?

A decade or two ago, allusions to the notion that there are many – perhaps infinitely many – universes would have been regarded as dabbling on the fringes of respectable science. Now the multiverse idea is embraced by many leading cosmologists and other physicists. That’s not because we have any evidence for it, but because it seems to offer a simultaneous resolution to several outstanding problems on the wild frontier where fundamental physics – the science of the immeasurably small – blends with cosmology, which attempts to explain the origin and evolution of all the vastness of space.

“In the last twenty years the multiverse has developed from an exotic speculation into a full-blown theory”, says Jencks. “From avant-garde conjecture held by the few to serious hypothesis entertained by the many, leading thinkers now believe the multiverse is a plausible description of an ensemble of universes.”

To explore how the multiverse came in from the cold, Jencks convened a gathering of cosmologists and particle physicists whose eminence would rival the finest of international science conventions. While the opening celebrations braved the elements at Crawick, the scientists were hosted by the duke at Drumlanrig Castle – perhaps the most stunning example of French-inflected Scottish baronial architecture, fashioned from the gorgeous red stone of Dumfries. In one long afternoon while the sun conveyed its rare blessing on the jaw-dropping gardens outside, these luminaries explained to an invited audience why they have come to suppose a multiplicity of universes beyond all reasonable measure: why an understanding of the deepest physical laws is compelling us to make the position of humanity in the cosmos about as insignificant as it could possibly be.

Drumlanrig Castle near Dumfries, where scientists convened to discuss the multiverse.

It was a decidedly surreal gathering, with PowerPoint presentations on complex physics amidst Louis XIV furniture, while massive portraits of the duke’s illustrious ancestors (including Charles II’s unruly illegitimate son the 1st Duke of Monmouth) looked on. When art historian Martin Kemp, opening the proceedings with a survey of spiral patterns, discussed the nature art of Andy Goldsworthy, only to have the artist himself pop up to explain his intentions, one had to wonder if we had already strayed into some parallel universe.

Martin Rees, Astronomer Royal and past President of the Royal Society, suggested that the multiverse theory represents a “fourth Copernican revolution”: the fourth time since Copernicus shoved the earth away from the centre of creation that we have been forced to downgrade our status in the heavens. Yet curiously, this latest perspective also gives our very existence a central role in any explanation of why the basic laws of nature are the way they are.

Here’s the problem. A quest for the much-vaunted Theory of Everything – a set of “simple” laws, or perhaps just a single equation, from which all the other principles of physics can be derived, and which will achieve the much-sought reconciliation of gravity and quantum theory – has landed us in the perplexing situation of having more alternatives to choose from than there are fundamental particles in the known universe. To be precise, the latest version of string theory, which many physicists who delve into these waters insist is the best candidate for a “final theory”, offers 10^500 (1 followed by 500 zeros) distinct solutions: that many possible variations on the laws of physics, with no obvious reason to prefer one over any other. Some are tempted to conclude that this is the fault of string theory, not of the universe, and so prefer to ditch the whole edifice, which without doubt is built on some debatable assumptions and remains far beyond our means to test directly for the foreseeable future.
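To get a feel for how lopsided that comparison is, here is a back-of-envelope sketch. The figure of roughly 1 followed by 80 zeros for the number of particles in the observable universe is my assumption, a commonly quoted order-of-magnitude estimate rather than anything stated at the meeting, and the function name is purely illustrative.

```python
# Back-of-envelope comparison of two very large numbers by their powers of ten.
# Both figures are order-of-magnitude estimates, not precise counts.

STRING_SOLUTIONS_EXP = 500  # from the text: 1 followed by 500 zeros
PARTICLES_EXP = 80          # ASSUMPTION: ~10**80 particles in the observable universe


def solutions_per_particle_exp(solutions_exp: int, particles_exp: int) -> int:
    """Return the power of ten of (number of solutions) / (number of particles)."""
    return solutions_exp - particles_exp


ratio_exp = solutions_per_particle_exp(STRING_SOLUTIONS_EXP, PARTICLES_EXP)
print(f"Roughly 10**{ratio_exp} candidate sets of physical laws per particle")
```

On those assumptions, even handing every particle in the observable universe its own private version of the laws of physics would barely dent the supply.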

If that were all there was to it, you might well wonder if indeed we should be wiping the board clean and starting again. But cosmology now suggests that this crazy proliferation of physical laws can be put to good use. The standard picture of the Big Bang – albeit not the one that all physicists embrace – posits that, a fraction of a second after the universe began to expand from its mysterious origin, it underwent a fleeting instant of expansion at an enormous rate, far faster than the speed of light, called inflation. This idea explains, in what might seem like but is not a paradox, both why the universe is so uniform everywhere we look and why it is not perfectly so. Inflation blew up the “fireball” to a cosmic scale before it had a chance to get too clumpy.

That primordial state would, however, have been unavoidably ruffled by the tiny chance variations that quantum physics creates. These fluctuations are now preserved at astronomical scales in slight differences in temperature of the cosmic microwave background radiation, the faint afterglow of the Big Bang itself that several satellite-based telescopes have now mapped out in fine detail. As astrophysicist Carlos Frenk explained at Drumlanrig, the match between the spectrum of temperature variations – their size at different distance scales – predicted by inflationary theory and that measured is so good that, were it not so well attested in so huge an international effort, it would probably arouse suspicions of data-rigging.

The temperature variations of the cosmic microwave background, as mapped by the European Space Agency’s Planck space telescope in 2013. The tiny variations correspond to regions of slightly different density in the very early universe that seeded the formation of clumps of matter – galaxies and stars – today.

What has this got to do with multiverses? Well, to put it one way: if you have a theory for how the Big Bang happened as a natural phenomenon, almost by definition you no longer have reason to regard it as a one-off event. The current view is that the Big Bang itself was a kind of condensation of energy-filled empty space – the “true vacuum” – out of an unstable medium called the “false vacuum”, much as mist condenses from the moist air of the Scottish hills. But this false vacuum, for reasons I won’t attempt to explain, should also be subject to a kind of inflation in which it expands at fantastic speed. Then our universe appears as a sort of growing “bubble” in the false vacuum. But others do too: not just 13.8 billion years ago (the age of our universe) but constantly. It’s a scenario called “eternal inflation”, as one of its pioneers, cosmologist Alex Vilenkin, explained at the meeting. In this view, there are many, perhaps infinitely many, universes appearing and growing all the time.

The reason this helps with string theory is that it relieves us of the need to select any one of the 10^500 solutions it yields. There are enough homes for all versions. That’s not just a matter of accommodating homeless solutions to an equation. One of the most puzzling questions of modern cosmology is why the vacuum is not stuffed full of unimaginable amounts of energy. Quantum theory predicts that empty space should be so full of particles popping in and out of existence all the time, just because they can, that it should hold far more energy than the interior of a star. Evidently it doesn’t, and for a long time it was simply assumed that some unknown effect must totally purge the vacuum of all this energy. But the discovery of dark energy in the late 1990s – which manifests itself as an acceleration of the expansion of our universe – forced cosmologists to accept that a tiny amount of that vacuum energy does in fact remain. In this view, that’s precisely what dark energy is. Yet it is so tiny an amount – 10^-122 of what is predicted – that it seems almost a cosmic joke that the cancellation should be so nearly complete but not quite.

But if there is a multiverse, this puzzle of “fine-tuning” goes away. We just happen to be living in one of the universes in which the laws of nature are, out of all the versions permitted by string theory, set up this way. Doesn’t that seem too extraordinary a piece of good fortune? Well no, because without this near cancellation of the vacuum energy, atoms could not exist, and so neither could ordinary matter, stars – or us. In any universe in which these conditions pertain, intelligent beings might be scratching their heads over this piece of apparent providence. In those – far more numerous – where that’s not the case, there is no one to lament it.