I’ve a short review online with Prospect of The Future of the Brain (Princeton University Press), edited by Gary Marcus & Jeremy Freeman. Here’s a slightly longer version. But I will say more about the book and topic in my Prospect blog soon.

_________________________________________________________________________

If you want a breezy, whistle-stop tour of the latest brain science, look elsewhere. But if you’re up for chunky, rather technical expositions by real experts, this book repays the effort. The message lies in the very (and sometimes bewildering) diversity of the contributions: despite its dazzling array of methods to study the brain, from fMRI to genetic techniques for labeling and activating individual neurons, this is still a primitive field largely devoid of conceptual and theoretical frameworks. As the editors put it, “Where some organs make sense almost immediately once we understand their constituent parts, the brain’s operating principles continue to elude us.”

Among the stimulating ideas on offer is neuroscientist Anthony Zador’s suggestion that the brain might lack unifying principles and merely get the job done with a makeshift “bag of tricks”. There’s fodder too for sociologists of science: several contributions evince the spirit of current projects that aim to amass dizzying amounts of data about how neurons are connected, seemingly in the blind hope that insight will fall out of the maps once they are detailed enough.

All the more reason, then, for the skeptical voices reminding us that “data analysis isn’t theory”, that current neuroscience is “a collection of facts rather than ideas”, and that we don’t even know what kind of computer the brain is. All the same, the “future” of the title might be astonishing: will “neural dust” scattered through the brain record all our thoughts? And would you want that uploaded to the Cloud?

Thursday, December 18, 2014

Wednesday, December 17, 2014

The restoration of Chartres: second thoughts

Several people have asked me what I think about the “restoration” of Chartres Cathedral, in the light of the recent piece by Martin Filler for the New York Review of Books. (Here, in case you’re wondering, is why anyone should wish to solicit my humble opinion on the matter.) I have commented on this before here, but the more I hear about the work, the less sanguine I feel. Filler makes some good arguments against, the most salient, I think, being the fact that this contravenes normal conservation protocols: the usual approach now, especially for paintings, is to do what we can to rectify damage (such as reattaching flakes of paint) but otherwise to leave well alone. In my earlier post I mentioned the case of York Cathedral, where masons actively replace old, crumbling masonry with new – but this is a necessary affair to preserve the integrity of the building, whereas slapping on a lick of paint isn’t. And the faux marble on the columns looks particularly hideous and unnecessary. To judge from the photos, the restoration looks far more tacky than I had anticipated.

It is perhaps not ideal that visitors to Chartres come away thinking that the wonderful, stark gloom is what worshippers in the Middle Ages would have experienced too. But it seems unlikely that the new paint job is going to get anyone closer to an authentic experience. Worse, it’s the kind of thing that, once done, is very hard to undo. It’s good to recognize that the reverence with which we generally treat the fabric of old buildings now is very different from the attitudes of earlier times – bishops would demand that structures be knocked down when they looked too old-fashioned and replaced with something à la mode, and during the nineteenth-century Gothic revival architects like Eugène Viollet-le-Duc would take all kinds of liberties with their “restorations”. But this is no reason why we should act the same way. So while there is still a part of me that is intrigued by the thought of being able to see the interior of Chartres in something close to its original state, I have come round to thinking that the cathedral should have been left alone.

Monday, December 15, 2014

Beyond the crystal

Here’s my Material Witness column from the November issue of Nature Materials (13, 1003; 2014).

___________________________________________________________________________

The International Year of Crystallography has understandably been a celebration of order. From René-Just Haüy’s prescient drawings of stacked cubes to the convolutions of membrane proteins, Nature’s Milestones in Crystallography revealed a discipline able to tackle increasingly complex and subtle forms of atomic-scale regularity. But it seems fitting, as the year draws to a close, to recognize that the road ahead is far less tidy. Whether it is the introduction of defects to control semiconductor band structure [1], the nanoscale disorder that can improve the performance of thermoelectric materials [2], or the creation of nanoscale conduction pathways in graphene [3], the future of solid-state materials physics seems increasingly to depend on a delicate balance of crystallinity and its violation. In biology, the notion of “structure” has always been less congruent with periodicity, but ever since Schrödinger’s famous “aperiodic crystal” there has been a recognition that a deeper order may underpin the apparent molecular turmoil of life.

The decision to redefine crystallinity to encompass the not-quite-regularity of quasicrystals is, then, just the tip of the iceberg when it comes to widening the scope of crystallography. Even before quasicrystals were discovered, Ruelle asked whether there might exist “turbulent crystals” without long-range order, exhibiting fuzzy diffraction peaks [4]. The goal of “generalizing” crystallography beyond its regular domain has been pursued most energetically by Mackay [5], who anticipated the link between quasicrystals and quasiperiodic tilings [6]. More recently, Cartwright and Mackay have suggested that structures such as crystals might be best characterized not by their degree of order as such but by the algorithmic complexity of the process by which they are made – making generalized crystallography an information science [7]. As Mackay proposed, “a crystal is a structure the description of which is much smaller than the structure itself, and this view leads to the consideration of structures as carriers of information and on to wider concerns with growth, form, morphogenesis, and life itself” [5].
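Mackay’s criterion can be illustrated with a crude experiment. The Python sketch below is purely illustrative and rests on two assumptions that are not in the sources: that the compressed size produced by zlib is a fair stand-in for “the description” of a structure (true algorithmic complexity cannot actually be computed), and that one-dimensional sequences of close-packed layers labelled A, B and C are a reasonable toy for “structure”. The names `description_length`, `periodic` and `disordered` are hypothetical.

```python
import random
import zlib

def description_length(seq: str) -> int:
    """Size in bytes of the zlib-compressed sequence: a crude, computable
    upper-bound proxy for algorithmic complexity (the true Kolmogorov
    complexity is uncomputable)."""
    return len(zlib.compress(seq.encode("ascii"), 9))

N_LAYERS = 3000  # illustrative number of close-packed layers

# "Perfect crystal": fcc-like stacking ABCABC..., fully specified by a short rule
periodic = "ABC" * (N_LAYERS // 3)

# "Disordered" stacking: each new layer is chosen at random from the two
# positions that differ from the layer below (A cannot sit directly on A).
rng = random.Random(42)
layers = ["A"]
for _ in range(N_LAYERS - 1):
    layers.append(rng.choice([x for x in "ABC" if x != layers[-1]]))
disordered = "".join(layers)

for name, seq in [("periodic", periodic), ("disordered", disordered)]:
    print(f"{name:>10}: {len(seq)} layers -> {description_length(seq)} compressed bytes")
```

The periodic sequence should shrink to a few dozen bytes however long it is made, while the random stacking cannot do much better than one bit per layer; only the contrast between the two numbers matters, not their exact values.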

These ideas have now been developed by Varn and Crutchfield to provide what they call an information-theoretic measure for describing materials structure [8]. Their aim is to devise a formal tool for characterizing the hitherto somewhat hazy notion of disorder in materials, thereby providing a framework that can encompass anything from perfect crystals to totally amorphous materials, all within a rubric of “chaotic crystallography”.

Their approach is again algorithmic. They introduce the concept of “ε-machines”, which are minimal operations that transform one state into another [9]: for example, one ε-machine can represent the appearance of a random growth fault. Varn and Crutchfield present nine ε-machines relevant to crystallography, and show how their operation to generate a particular structure is a kind of computation that can be assigned a Shannon entropy, like more familiar computations involving symbolic manipulations. Any particular structure or arrangement of components can then be specified in terms of an initially periodic arrangement of components and the amount of ε-machine computation needed to generate from it the structure in question. The authors demonstrate how, for a simple one-dimensional case, diffraction data can be inverted to reconstruct the ε-machine that describes the disordered material structure.
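To make this a little more concrete, here is a minimal sketch in Python. It is not Varn and Crutchfield’s model: the two-state machine, its fault probabilities `p` and `q`, and the function names are all hypothetical, chosen only to show how a small machine with probabilistic transitions can generate a faulted close-packed stacking sequence and be assigned a Shannon entropy rate (the randomness per layer) and a “statistical complexity” (the information stored in its states). It also assumes a binary coding of each layer relative to the one below (1 if it is the cyclic successor, A→B→C→A, and 0 otherwise), in the spirit of the Hägg notation for close-packed stacking.

```python
import math
import random

def binary_entropy(x: float) -> float:
    """Shannon entropy in bits of the two-outcome distribution {x, 1 - x}."""
    if x <= 0.0 or x >= 1.0:
        return 0.0
    return -x * math.log2(x) - (1 - x) * math.log2(1 - x)

def machine_measures(p: float, q: float) -> tuple[float, float]:
    """Entropy rate h_mu and statistical complexity C_mu (both in bits) of a
    hypothetical two-state machine emitting binary stacking symbols:
        state F: emit 1 with prob 1 - p (stay in F); emit 0 with prob p (go to T)
        state T: emit 0 with prob q (stay in T);     emit 1 with prob 1 - q (go to F)
    F and T are genuinely distinct states only if p != q.
    Requires p + (1 - q) > 0 so that the stationary distribution exists."""
    pi_f = (1 - q) / (p + 1 - q)   # long-run fraction of time spent in state F
    pi_t = 1 - pi_f                # long-run fraction of time spent in state T
    h_mu = pi_f * binary_entropy(p) + pi_t * binary_entropy(q)
    c_mu = binary_entropy(pi_t)    # Shannon entropy of the state distribution
    return h_mu, c_mu

def generate_stacking(p: float, q: float, n_layers: int, seed: int = 0) -> str:
    """Run the machine from state F, turning each symbol into a layer:
    1 = cyclic successor of the layer below (A->B->C->A), 0 = cyclic predecessor.
    Adjacent layers therefore always differ."""
    rng = random.Random(seed)
    order = "ABC"
    state, layers = "F", ["A"]
    for _ in range(n_layers - 1):
        p_zero = p if state == "F" else q   # probability of emitting 0 in this state
        symbol = 0 if rng.random() < p_zero else 1
        state = "T" if symbol == 0 else "F"
        step = 1 if symbol == 1 else -1
        layers.append(order[(order.index(layers[-1]) + step) % 3])
    return "".join(layers)

# No faults (p = 0): a perfect ABCABC... crystal with zero entropy rate.
print(generate_stacking(0.0, 0.5, 9), machine_measures(0.0, 0.5))
# Occasional faults that flip the stacking into its mirror-image (twin)
# sequence, which then tends to persist.
print(generate_stacking(0.05, 0.9, 30), machine_measures(0.05, 0.9))
```

With p = 0 the machine never leaves state F, so the output is the perfect ABCABC... crystal and both measures are zero. Turning p and q up adds randomness (a larger h_mu) and makes the structure “remember” which orientation it is in (a nonzero C_mu). Genuine ε-machine reconstruction runs the other way, inferring the states from data (in Varn and Crutchfield’s case, from diffraction patterns); this sketch only runs a given machine forwards.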

Quite how this will play out in classifying and distinguishing real materials structures remains to be seen. But it surely underscores the point made by D’Arcy Thompson, the pioneer of morphogenesis, in 1917: “Everything is what it is because it got that way” [10].

1. Seebauer, E. G. & Noh, K. W. Mater. Sci. Eng. Rep. 70, 151-168 (2010).

2. Snyder, G. J. & Toberer, E. S. Nat. Mater. 7, 105-114 (2008).

3. Lahiri, J., Lin, Y., Bozkurt, P., Oleynik, I. I. & Batzill, M. Nat. Nanotech. 5, 326-329 (2010).

4. Ruelle, D. Physica A 113, 619-623 (1982).

5. Mackay, A. L. Struct. Chem. 13, 215-219 (2002).

6. Mackay, A. L. Physica A 114, 609-613 (1982).

7. Cartwright, J. H. E. & Mackay, A. L. Phil. Trans. R. Soc. A 370, 2807-2822 (2012).

8. Varn, D. P. & Crutchfield, J. P. Preprint at http://www.arxiv.org/abs/1409.5930 (2014).

9. Crutchfield, J. P. Nat. Phys. 8, 17-24 (2012).

10. Thompson, D’A. W. On Growth and Form (Cambridge University Press, Cambridge, 1917).

Friday, December 12, 2014

Why some junk DNA is selfish, but selfish genes are junk

“Horizontal gene transfer is more common than thought”: that's the message of a nice article in Aeon. I first came across it via a tweeted remark to the effect that this was the ultimate expression of the selfish gene. Why, genes are so selfish that they’ll even break the rules of inheritance by jumping right into the genomes of another species!

Now, that is some trick. I mean, the gene has to climb out of its native genome – and boy, those bonds are tough to break free from! – and then swim through the cytoplasm to the cell wall, wriggle through and then leap out fearlessly into the extracellular environment. There it has to live in hope of a passing cell before it gets degraded, and if it’s in luck then it takes out its diamond-tipped cutting tool and gets to work on…

Wait. You’re telling me the gene doesn’t do all this by itself? You’re saying that there is a host of genes in the donor cell that helps it happen, and a host of genes in the receiving cell to fix the new gene in place? But I thought the gene was being, you know, selfish? Instead, it’s as if it has sneaked into a house hoping to occupy it illegally, only to find a welcoming party offering it a cup of tea and a bed. Bah!

No, but look, I’m being far too literal about this selfishness, aren’t I? Well, aren’t I? Hmm, I wonder – because look, the Aeon page kindly directs me to another article by Itai Yanai and Martin Lercher that tells me what this selfishness business is all about.

And I think: have I wandered into 1976?

You see, this is what I find:

“Yet viewing our genome as an elegant and tidy blueprint for building humans misses a crucial fact: our genome does not exist to serve us humans at all. Instead, we exist to serve our genome, a collection of genes that have been surviving from time immemorial, skipping down the generations. These genes have evolved to build human ‘survival machines’, programmed as tools to make additional copies of the genes (by producing more humans who carry them in their genomes). From the cold-hearted view of biological reality, we exist only to ensure the survival of these travellers in our genomes... The selfish gene metaphor remains the single most relevant metaphor about our genome.”

Gosh, that really is cold-hearted, isn’t it? It makes me feel so sad. But what leads these chaps to this unsparing conclusion, I wonder?

This: “From the viewpoint of natural selection, each gene is a long-lived replicator, its essential property being its ability to spawn copies.”

Then evolution, it seems, isn’t doing its job very well. Because, you see, I just took a gene and put it in a beaker and fed it with nucleotides, and it didn’t make a single copy. It was a rubbish replicator. So I tried another gene. Same thing. The funny thing was, the only way I could get the genes to replicate was to give them the molecular machinery and ingredients. Like in a PCR machine, say – but that’s like putting them on life support, right? The only way they’d do it without any real intervention was if I put the gene in a genome in a cell. So it really looked to me as though cells, not genes, were the replicators. Am I doing something wrong? After all, I am reliably informed that the gene “is on its own as a ‘replicator’” – because “genes, but no other units in life’s hierarchy, make exact copies of themselves in a pool of such copies”. But genes no more “make exact copies of themselves in a pool of such copies” than printed pages (in a stack of other pages) make exact copies of themselves on the photocopier.

Oh, but silly me. Of course the genes don’t replicate by themselves! The gene is on its own as a replicator but doesn’t replicate on its own! (Got that?) No, you see, they can only do the job all together – ideally in a cell. “When looking at our genome”, say Yanai and Lercher, “we might take pride in how individual genes co-operate in order to build the human body in seemingly unselfish ways. But co-operation in making and maintaining a human body is just a highly successful strategy to make gene copies, perfectly consistent with selfishness.”

To be honest, I’ve never taken very much pride in what my genes do. But anyway: perfectly consistent with selfishness? Let me see. I pay my taxes, I obey the laws, I contribute to charities that campaign for equality, I try to be nice to people, and a fair bit of this I do because I feel it is a pretty good thing to be a part of a society that functions well. I figure that’s probably best for me in the long run. Aha! – so what I do is perfectly consistent with selfishness. Well yes, but look, you’re not going to call me selfish just because I want to live in a well-ordered society, are you? No, but then I have intentions and thoughts of the future, I have acquired moral codes and so on – genes don’t have any of these things. Hmm… so how exactly does that make the metaphor of “selfishness” work? Or more precisely, how does it make selfishness a better metaphor than cooperativeness? If “genes don’t care”, then neither metaphor is better than the other. It’s just stuff that happens when genes get together.

But no, wait, maybe I’m missing the point. Genes are selfish because they compete with each other for limited resources, and only the best replicators – well no, only those housed in the cells or organisms that are themselves the best at replicating their genomes – survive. See, it says here: “Those genes that fail at replicating are no longer around, while even those that are good face stiff competition from other replicators. Only the best can secure the resources needed to reproduce themselves.”

This is the bullshit at the heart of the issue. “Good genes” face stiff competition from who exactly? Other replicators? So a phosphatase gene is competing with a dehydrogenase gene? (Yeah, who would win that fight?) No. No, no, no. This, folks, this is what I would bet countless people believe because of the bad metaphor of selfishness. Yet the phosphatase gene might well be doomed without the dehydrogenase gene. They need each other. They are really good friends. (These personification metaphors are great, aren’t they?) If the dehydrogenase gene gets better at its job, the phosphatase gene further down the genome just loves it, because she gets the benefit too! She just loves that better dehydrogenase. She goes round to his place and…

Hmm, these metaphors can get out of hand, can’t they?

No, if the dehydrogenase gene is competing with anyone, it’s with other alleles of the dehydrogenase gene. Genes aren’t in competition, alleles are.

(Actually even that doesn’t seem quite right. Organisms compete, and their genetic alleles affect their ability to compete. But this gives a sufficient appearance of competition among alleles that I can accept the use of the word.)

Genes only get replicated (by and large) in a genome, so if a gene is “improved” by natural selection, the whole genome benefits. But that’s just a side result – the gene doesn’t care about the others! Yet this is precisely the point. Because the gene “doesn’t care”, all you can talk about is what you see, not what you want to ascribe, metaphorically or otherwise, to a gene. An advantageous gene mutation helps the whole genome replicate. It’s not a question of who cares or who doesn’t, or what the gene “really” wants or doesn’t want. That is the outcome. “Selfishness” doesn’t help to elucidate that outcome – it confuses it.

“So why are we fooled into believing that humans (and animals and plants) rather than genes are what counts in biology?” the authors ask. They give an answer, but it’s not the right one. Higher organisms are a special case, of course, especially ones that reproduce sexually – it’s really cells that count. We’re “fooled” because cells can reproduce autonomously, but genes can’t.

So cells are where it’s at? Yes, and that’s why this article by Mike Lynch et al. calling for a meeting of evolutionary theory and cell biology is long overdue (PNAS 111, 16990; 2014). For one thing, it might temper erroneous statements like this one that Yanai and Lercher make: “Darwin showed that one simple logical principle [natural selection] could lead to all of the spectacular living design around us.” As Lynch and colleagues point out, there is abundant evidence that natural selection is one of several evolutionary processes that have shaped cells and simple organisms: “A commonly held but incorrect stance is that essentially all of evolution is a simple consequence of natural selection.” They point out, for example, that many pathways to greater complexity of both genomes and cells don’t confer any selective fitness.

The authors end with a tired Dawkinsesque flourish: “we exist to serve our genome”. This statement has nothing to do with science – it is akin to the statement that “we are at the pinnacle of evolution”, but looking in the other direction. It is a little like saying that we exist to serve our minds – or that water falls on mountains in order to run downhill. It is not even wrong. We exist because of evolution, but not in order to do anything. Isn’t it strange how some who preen themselves on facing up to life’s lack of purpose then go right ahead and give it back a purpose?

The sad thing is that the Aeon article is actually all about junk DNA and what ENCODE has to say about it. It makes some fair criticisms of ENCODE’s dodgy definition of “function” for DNA. But it does so by examining the so-called LINE-1 elements in genomes, which are non-coding but just make copies of themselves. There used to be a word for this kind of DNA. Do you know what that word was? Selfish.

In the 1980s, geneticists and molecular biologists such as Francis Crick, Leslie Orgel and Ford Doolittle used “selfish DNA” in a strict sense, to refer to DNA sequences that just accumulated in genomes by making copies – and which did not themselves affect the phenotype (W. F. Doolittle & C. Sapienza, Nature 284, 601 (1980); L. E. Orgel & F. H. C. Crick, Nature 284, 604 (1980)). This stuff not only had no function, it messed things up if it got too rife: it could eventually be deleterious to the genome that it infected. Now that’s what I call selfish! – something that acts in a way that is to its own benefit in the short term while benefitting nothing else, and which ultimately harms everything.

So you see, I’m not against the use of the selfish metaphor. I think that in its original sense it was just perfect. Its appropriation to describe the entire genome – as an attribute of all genes – wasn’t just misleading, it also devalued a perfectly good use of the term.

But all that seems to have been forgotten now. Could this be the result of some kind of meme, perhaps?

Now, that is some trick. I mean, the gene has to climb out of its native genome – and boy, those bonds are tough to break free from! – and then swim through the cytoplasm to the cell wall, wriggle through and then leap out fearlessly into the extracellular environment. There it has to live in hope of a passing cell before it gets degraded, and if it’s in luck then it takes out its diamond-tipped cutting tool and gets to work on…

Wait. You’re telling me the gene doesn’t do all this by itself? You’re saying that there is a host of genes in the donor cell that helps it happen, and a host of genes in the receiving cell to fix the new gene in place? But I thought the gene was being, you know, selfish? Instead, it’s as if it has sneaked into a house hoping to occupy it illegally, only to find a welcoming party offering it a cup of tea and a bed. Bah!

No, but look, I’m being far too literal about this selfishness, aren’t I? Well, aren’t I? Hmm, I wonder – because look, the Aeon page kindly directs me to another article by Itai Yanai and Martin Lercher that tells me all about what this selfishness business is all about.

And I think: have I wandered into 1976?

You see, this is what I find:

“Yet viewing our genome as an elegant and tidy blueprint for building humans misses a crucial fact: our genome does not exist to serve us humans at all. Instead, we exist to serve our genome, a collection of genes that have been surviving from time immemorial, skipping down the generations. These genes have evolved to build human ‘survival machines’, programmed as tools to make additional copies of the genes (by producing more humans who carry them in their genomes). From the cold-hearted view of biological reality, we exist only to ensure the survival of these travellers in our genomes... The selfish gene metaphor remains the single most relevant metaphor about our genome.”

Gosh, that really is cold-hearted isn’t it? It makes me feel so sad. But what leads these chaps to this unsparing conclusion, I wonder?

This: “From the viewpoint of natural selection, each gene is a long-lived replicator, its essential property being its ability to spawn copies.”

Then evolution, it seems, isn’t doing its job very well. Because, you see, I just took a gene and put it in a beaker and fed it with nucleotides, and it didn’t make a single copy. It was a rubbish replicator. So I tried another gene. Same thing. The funny thing was, the only way I could get the genes to replicate was to give them the molecular machinery and ingredients. Like in a PCR machine, say – but that’s like putting them on life support, right? The only way they’d do it without any real intervention was if I put the gene in a genome in a cell. So it really looked to me as though cells, not genes, were the replicators. Am I doing something wrong? After all, I am reliably informed that the gene “is on its own as a “replicator” – because “genes, but no other units in life’s hierarchy, make exact copies of themselves in a pool of such copies”. But genes no more “make exact copies of themselves in a pool of such copies” than printed pages (in a stack of other pages) make exact copies of themselves on the photocopier.

Oh, but silly me. Of course the genes don’t replicate by themselves! It is on its own as a replicator but doesn’t replicate on its own! (Got that?) No, you see, they can only do the job all together – ideally in a cell. “When looking at our genome”, say Yanai and Lercher, “we might take pride in how individual genes co-operate in order to build the human body in seemingly unselfish ways. But co-operation in making and maintaining a human body is just a highly successful strategy to make gene copies, perfectly consistent with selfishness.”

To be honest, I’ve never taken very much pride in what my genes do. But anyway: perfect consistent with selfishness? Let me see. I pay my taxes, I obey the laws, I contribute to charities that campaign for equality, I try to be nice to people, and a fair bit of this I do because I feel it is a pretty good thing to be a part of a society that functions well. I figure that’s probably best for me in the long run. Aha! – so what I do is perfectly consistent with selfishness. Well yes, but look, you’re not going to call me selfish just because I want to live in a well ordered society are you? No, but then I have intentions and thoughts of the future, I have acquired moral codes and so on – genes don’t have any of these things. Hmm… so how exactly does that make the metaphor of “selfishness” work? Or more precisely, how does it make selfishness a better metaphor than cooperativeness? If “genes don’t care”, then neither metaphor is better than the other. It’s just stuff that happens when genes get together.

But no, wait, maybe I’m missing the point. Genes are selfish because they compete with each other for limited resources, and only the best replicators – well no, only those housed in the cells or organisms that are themselves the best at replicating their genomes – survive. See, it says here: “Those genes that fail at replicating are no longer around, while even those that are good face stiff competition from other replicators. Only the best can secure the resources needed to reproduce themselves.”

This is the bullshit at the heart of the issue. “Good genes” face stiff competition from who exactly? Other replicators? So a phosphatase gene is competing with a dehydrogenase gene? (Yeah, who would win that fight?) No. No, no, no. This, folks, this is what I would bet countless people believe because of the bad metaphor of selfishness. Yet the phosphatase gene might well be doomed without the dehydrogenase gene. They need each other. They are really good friends. (These personification metaphors are great, aren’t they?) If the dehydrogenase gene gets better at its job, the phosphatase gene further down the genome just loves it, because she gets the benefit too! She just loves that better dehydrogenase. She goes round to his place and…

Hmm, these metaphors can get out of hand, can’t they?

No, if the dehydrogenase gene is competing with anyone, it’s with other alleles of the dehydrogenase gene. Genes aren’t in competition, alleles are.

(Actually even that doesn’t seem quite right. Organisms compete, and their genetic alleles affect their ability to compete. But this gives a sufficient appearance of competition among alleles that I can accept the use of the word.)

So genes only get replicated (by and large) in a genome. So if a gene is “improved” by natural selection, the whole genome benefits. But that’s just a side result – the gene doesn’t care about the others! Yet this is precisely the point. Because the gene “doesn’t care”, all you can talk about is what you see, not what you want to ascribe, metaphorically or otherwise, to a gene. An advantageous gene mutation helps the whole genome replicate. It’s not a question of who cares or who doesn’t, or what the gene “really” wants or doesn’t want. That is the outcome. “Selfishness” doesn’t help to elucidate that outcome – it confuses it.

“So why are we fooled into believing that humans (and animals and plants) rather than genes are what counts in biology?” the authors ask. They give an answer, but it’s not the right one. Higher organisms are a special case, of course, especially ones that reproduce sexually – it’s really cells that count. We’re “fooled” because cells can reproduce autonomously, but genes can’t.

So cells are where it’s at? Yes, and that’s why this article by Mike Lynch et al. calling for a meeting of evolutionary theory and cell biology is long overdue (PNAS 111, 16990; 2014). For one thing, it might temper erroneous statements like this one that Yanai and Lercher make: “Darwin showed that one simple logical principle [natural selection] could lead to all of the spectacular living design around us.” As Lynch and colleagues point out, there is abundant evidence that natural selection is one of several evolutionary processes that has shaped cells and simple organisms: “A commonly held but incorrect stance is that essentially all of evolution is a simple consequence of natural selection.” They point out, for example, that many pathways to greater complexity of both genomes and cells don’t confer any selective fitness.

The authors end with a tired Dawkinsesque flourish: “we exist to serve our genome”. This statement has nothing to do with science – it is akin to the statement that “we are at the pinnacle of evolution”, but looking in the other direction. It is a little like saying that we exist to serve our minds – or that water falls on mountains in order to run downhill. It is not even wrong. We exist because of evolution, but not in order to do anything. Isn’t it strange how some who preen themselves on facing up to life’s lack of purpose then go right ahead and give it back a purpose?

The sad thing is that that Aeon article is actually all about junk DNA and what ENCODE has to say about it. It makes some fair criticisms of ENCODE’s dodgy definition of “function” for DNA. But it does so by examining the so-called LINE-1 elements in genomes, which are non-coding but just make copies of themselves. There used to be a word for this kind of DNA. Do you know what that word was? Selfish.

In the 1980s, geneticists and molecular biologists such as Francis Crick, Leslie Orgel and Ford Doolittle used “selfish DNA” in a strict sense, to refer to DNA sequences that just accumulated in genomes by making copies – and which did not themselves affect the phenotype (W. F. Doolittle & C. Sapienza, Nature 284, p601, and L. E. Orgel & F. H. C. Crick, p604, 1980). This stuff not only had no function, it messed things up if it got too rife: it could eventually be deleterious to the genome that it infected. Now that’s what I call selfish! – something that acts in a way that is to its own benefit in the short term while benefitting nothing else, and which ultimately harms everything.

So you see, I’m not against the use of the selfish metaphor. I think that in its original sense it was just perfect. Its appropriation to describe the entire genome – as an attribute of all genes – wasn’t just misleading, it also devalued a perfectly good use of the term.

But all that seems to have been forgotten now. Could this be the result of some kind of meme, perhaps?

Monday, December 08, 2014

Chemistry for the kids - a view from the vaults

At some point this is all going to become a more coherently thought-out piece, but right now I just want to show you some of the Chemical Heritage Foundation’s fabulous collection of chemistry kits through the ages. It is going to form the basis of an exhibition at some point in the future, so consider this a preview.

There is an entire social history to be told through these boxes of chemistry for kids.

Here's one of the earliest examples, in which the chemicals come in rather fetching little wooden bottles. That’s the spirit, old chap!

I like the warning on this one: if you’re too little or too dumb to read the instructions, keep your hands off.

Sartorial tips here for the young chemist, sadly unheeded today. Tuck those ties in, mind – you don’t want them dipping in the acid. Lots of the US kits, like this one, were made by A. C. Gilbert Co. of New Haven, Connecticut, which became one of the biggest toy manufacturers in the world. The intriguing thing is that the company began in 1909 as a supplier of materials for magic shows – Alfred Gilbert was a magician. So even at this time, the link between stage magic and chemical demonstrations, which had been established in the nineteenth century, was still evident.

Girls, as you know, cannot grow up to be scientists. But if they apply themselves, they might be able to adapt their natural domestic skills to become lab technicians. Of course, they’ll only want this set if it is in pink.

But if that makes you cringe, it got far worse. Some chemistry sets were still marketed as magic shows even in the 1940s and 50s. Of course, this required that you dress up as some exotic Eastern fellow, like a “Hindu prince or Rajah”. And he needs an assistant, who should be “made up as an Ethiopian slave”. “His face and arms should be blackened with burned cork… By all means assign him a fantastic name such as Allah, Kola, Rota or any foreign-sounding word.” Remember now, these kits were probably being given to the fine young boys who would have been formulating US foreign policy in the 1970s and 80s (or, God help us, even now).

OK, so boys and girls can both do it in this British kit, provided that they have this rather weird amalgam of kitchen and lab.

Don’t look too closely, though, at the Periodic Tables pinned to the walls on either side. With apologies for the rubbish image from my phone camera, I think you can get the idea here.

This is one of my favourites. It includes “Safe experiments in atomic energy”, which you can conduct with a bit of uranium ore. Apparently, some of the Gilbert kits also included a Geiger counter. Make sure an adult helps you, kids!

Here are the manuals for it – part magic, part nuclear.

But we are not so reckless today. Oh no. Instead, you get 35 “fun activities”… with “no chemicals”. Well, I should jolly well hope not!

This one speaks volumes about its times, which you can see at a glance was the 1970s. It is not exactly a chemistry kit in the usual sense, because for once the kids are doing their experiments outside. Now they are not making chemicals, but testing for them: looking for signs of pollution and contamination in the air and the waters. Johnny Horizon is here to save the world from the silent spring.

There is still a whiff of the old connection with magic here, and with the alchemical books of secrets (which are the subject of the CHF exhibition that brought me here).

But here we are now. This looks a little more like it.

What a contrast this is from the clean, shiny brave new world of yesteryear.

Many thanks to the CHF folks for dragging these things from their vaults.

Wednesday, December 03, 2014

Pushing the boundaries

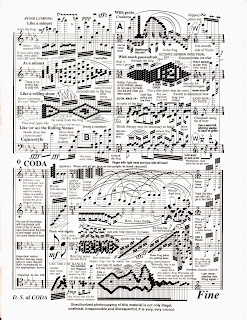

Here is my latest Music Instinct column for Sapere magazine. I collected some wonderful examples of absurdly complicated scores while working on this, but none with quite the same self-mocking wit as the one above.

___________________________________________________________________

There’s no accounting for taste, as people say, usually when they are deploring someone else’s. But there is. Psychological tests since the 1960s have suggested that what people tend to look for in music, as in visual art, is an optimal level of complexity: not too much, not too little. The graph of preference versus complexity looks like an inverted U [1].

Thus we soon grow weary of nursery rhymes, but complex experimental jazz is a decidedly niche phenomenon. So what is the ideal level of musical complexity? By some estimates, fairly low. The Beatles’ music has been shown to get generally more complex from 1962 to 1970, based on analysis of the rhythmic patterns, statistics of melodic pitch-change sequences and other factors [2]. And as complexity increased, sales declined. Of course, there’s surely more to the trend than that, but it implies that “All My Loving” is about as far as most of us will go.

But mightn’t there be value – artistic, if not commercial – in exploring the extremes of simplicity and complexity? Classical musicians evidently think so, whether it is the two-note drones of 1960s ultra-minimalist La Monte Young or the formidable, rapid-fire density of the “New Complexity” school of Brian Ferneyhough. Few listeners, it must be said, want to stay in these rather austere sonic landscapes for long.

But musical complexity needn’t be ideologically driven torture. J. S. Bach sometimes stacked up as many as six of his overlapping fugal voices, while the chromatic density of György Ligeti’s Atmosphères, with up to 56 string instruments all playing different notes, made perfect sense when used as the soundtrack to Stanley Kubrick’s 2001: A Space Odyssey.

The question is: what can the human mind handle? We have trouble following two conversations at once, but we seem able to handle musical polyphony without too much trouble. There are clearly limits to how much information we can process, but on the whole we probably sell ourselves short. Studies show that the more original and unpredictable music is, the more attentive we are to it – and often, relatively little exposure is needed before a move towards the complex end of the spectrum ceases to be tedious or confusing and becomes pleasurable. Acculturation can work wonders. Before pop music colonized Indonesia, gamelan was its pop music – and that, according to one measure of complexity rooted in information theory, is perhaps the most complex major musical style in the world.

1. Davies, J. B. The Psychology of Music. Hutchinson, London, 1978.

2. Eerola, T. & North, A. C. ‘Expectancy-based model of melodic complexity’, in Proceedings of the 6th International Conference of Music Perception and Cognition (Keele University, August 2000), eds Woods, C., Luck, G., Brochard, R., Seddon, F. & Sloboda, J. Keele University, 2000.

Wednesday, November 26, 2014

Hidden truths

I had meant to put up this piece – my October Crucible column for Chemistry World – some time back, so as to have the opportunity to show more of the amazing images of Liu Bolin. So anyway, here it is.

____________________________________________________________________

When I first set eyes on Liu Bolin, I didn’t see him at all. The Chinese artist has been dubbed the “Invisible Man”, because he uses extraordinarily precise body-painting to hide himself against all manner of backgrounds: shelves of magazines or tins in supermarkets, government propaganda posters, the Great Wall. What could easily seem in the West to be a clever gimmick becomes a deeply political statement in China, where invisibility serves as a metaphor for the way the state is perceived to ignore or “vanish” the ordinary citizen, while the rampant profiteering of the booming Chinese economy turns individuals into faceless consumers of meaningless products. In one of his most provocative works, Liu stands in front of the iconic portrait of Mao Zedong in Tiananmen Square, painted so that just his head and shoulders seem to be superimposed over those of the Great Helmsman. In another, a policeman grasps what appears to be a totally transparent, almost invisible Liu, or places his hands over the artist’s invisible eyes.

More recently Liu has commented on the degradation of China’s environment and the chemical adulteration of its food and drink. Some of these images are displayed in a new exhibition, A Colorful World?, at the Klein Sun Gallery in New York, which opened on 11 September. The title refers to “the countless multicolored advertisements and consumer goods that cloud today’s understanding of oppression and injustice.” But it’s not just their vulgarity and waste that Liu wants to point out, for in China there has sometimes been far more to fear from foods than their garish packaging. “The bright and colorful packaging of these snack foods convey a lighthearted feeling of joy and happiness, but what they truly provide is hazardous to human health”, the exhibition’s press release suggests. It’s bad enough that the foods are laden with carcinogens and additives, but several recent food scandals in China have revealed the presence of highly dangerous compounds. In 2008, some leading brands of powdered milk and infant formula were found to contain melamine, a toxic carcinogen added to boost the apparent protein content and so allow the milk to be diluted without failing quality standards. Melamine can cause kidney stones – several babies died from that condition, while many thousands were hospitalized.

There have been several other cases of foods treated with hormones and other hazardous cosmetic additives. The most recent involves the use of phthalate plasticizers in soft drinks as cheap replacements for palm oil. These compounds may be carcinogenic and are thought to disrupt the endocrine and reproductive systems by mimicking hormones. In his 2011 work Plasticizer, Liu commented on this use of such additives by “disappearing” in front of supermarket shelves of soft drinks.

So there is no knee-jerk chemophobia in these works, which represent a genuine attempt to highlight the lack of accountability and the malpractice within the food industry – and not just in China. The same is true of Liu’s Cancer Village, part of his Hiding in the City series in which he and others are painted to vanish against Chinese landscapes. The series began as a protest against the demolition of an artists’ village near Beijing in 2005 – Liu vanished amongst the rubble – but Cancer Village illustrates the invisibility and official “non-existence” of ordinary citizens in the face of a much more grievous threat: chemical pollution from factories. This seems likely to be the cause of a massive increase in cancer incidence in the home village of the 23 people whom Liu and his assistants have merged into a field, behind which a chemical plant looms.

Such politically charged performance art walks a delicate line in China. Artists there have refined their approach to combine a lyrical, even playful obliqueness – less easily attacked by censors – with resonant symbolism. When in 1994 Wang Jin emptied 50 kg of red organic pigment into the Red Flag Canal in Henan Province for his work Battling the Flood, it was a sly comment not just on the uncritical “Red China” rhetoric of the Mao era (when the canal was dug) but also on the terrible bloodshed of that period.

It could equally have been a statement about the lamentable state of China’s waterways, where pollution, much of it from virtually unregulated chemical plants, has rendered most of the river water fit only for industrial uses. That was certainly the concern highlighted by Yin Xiuzhen’s 1995 work Washing the River, in which the artist froze blocks of water from the polluted Funan River in Chengdu and stacked them on the banks. Passers-by were invited to “wash” the ice with clean water in an act that echoed the purifying ritual of baptism. This year Yin has recreated Washing the River on the polluted Derwent River near Hobart, Tasmania.

These are creative and sometimes moving responses to problems with both technological and political causes. They should be welcomed by scientists, who are good at spotting such problems but sometimes struggle to elicit a public reaction to them.

Thursday, November 20, 2014

The science and politics of gentrification

I figured that, after the tribulations of touching on the sensitive subject of genes and behaviour, it might be some light relief to turn to the interesting subject of urban development, and specifically a new paper looking at the morphological characteristics shared by parts of London that have recently undergone gentrification. I was attracted to the study partly because the locales it considers are so familiar to me – two of the areas, Brixton and Telegraph Hill, are just down the road from me in southeast London. But this also presented an opportunity to talk about the emerging approach to urban theory that regards cities as having “natural histories” amenable to exploration using scientific tools and concepts that were developed to understand morphological change and growth in the natural world. It’s an approach that I believe is proving very fruitful in terms of understanding how and why cities evolve.

Well, so I fondly imagined as I wrote the piece below for the Guardian. Now, I’m not totally naïve – I realise that gentrification is a sensitive subject given the decidedly mixed nature of the results. Districts might become safer and more family-friendly, not to mention the fact that they serve better coffee; but at the same time they can become unaffordable to many locals, stripped of some of their traditional character, and prey to predatory developers.

I’ve seen this happen in my own area of East Dulwich. I always feel that, when I say to Londoners that this is where I live, I have to quickly explain that we moved here 20 years ago when properties were cheap and the main street was fairly run-down and populated by a mixture of quirky but decidedly un-chi-chi shops. There is no way we could afford to move here today. So yes, the parks and cafés are all very nice, but I’ve mixed feelings about the demographic shifts and I’m dismayed by the stupid property prices.

So it was no surprise to find such mixed feelings reflected in the readers’ comments on my piece, many of which were pleasingly thoughtful and interesting compared to the snarky feedback one often gets on Comment is Free. But what I hadn’t anticipated was the Twitter response, where there seemed to be a sense in some quarters that my remarks on gentrification betrayed a rather sinister agenda. Admittedly, some of this was just ideological cant (ironically of a Marxist persuasion, I suspect), but some of the critical feedback offered food for thought on how notions of self-organization and complexity applied to urban growth can be received – responses that I hadn’t anticipated or encountered before.

Some felt that to regard gentrification, or any other aspect of urban development, as something akin to a “natural” process is to offer a convenient smokescreen for the fact that many such processes are driven by profit, greed and venality – or as some would say, by capital. To consider these processes in naturalistic terms, they said, is to risk disguising their political and economic origins, and to belittle the sometimes dismaying social consequences. Indeed, it seems that for some people this whole approach smacks of a kind of social Darwinism of the same ilk as that which proclaims that inequalities are natural and inevitable and that it is therefore fruitless to deplore them.

I can understand this point of view to some degree, for certainly a “naturalism” based on a misappropriation of Darwinian ideas has been used in the past to excuse the rapaciousness of capitalist economies. But to imagine that this is what a modern “complexity” approach to social phenomena is all about seems to me to reflect a deep and possibly even dangerous confusion. The aim of such work is, in general, to understand how certain consequences emerge from the social and institutional structures we create. These consequences might sometimes be highly non-intuitive in ways that simple cause-and-effect narratives can’t hope to capture. They are intended to be partly descriptive – what are the key features that characterize such phenomena; how are they different in different instances? – and partly explanatory. Often the methods involve agent-based modelling, starting from the question: if the agents are allowed or constrained to interact in such and such a way, what are the results likely to be? In the current case, the question is: why do some areas undergo gentrification, but not others? I can’t begin to imagine why, regardless of how you feel about gentrification or what its socio-political causes might be, that question would not be of interest.

But to see such explorations as a kind of justification of what it is they seek to understand is to totally misconstrue the objective. Look at it this way. There has been a great deal of work done on traffic flows, in particular to understand how they break down and become congested. It would be bizarre to suggest that such studies are seeking to excuse or justify congestion. On the contrary, their aim is generally to find the causative influences of congestion, so that traffic rules or networks might be better designed to avoid it. Similarly, attempts to understand economic crashes and recessions using ideas from complex-systems theory don’t for a moment take as their starting point the idea that, if we can show why such events emerge from our existing economic systems, we have somehow shown that these are things we must just lie down and accept. (Indeed, amongst other things they can counter the foolish delusion, popular pre-2008, that such fluctuations are a thing of the past.)

Now, you might say that I did however begin my Guardian piece with the suggestion that (according to the researchers who did the work it describes) gentrification is “almost a law of nature”. This is certainly what the paper implies – that this phenomenon is simply a part of the cyclic change and renewal that cities experience. Surely one would hope – I would hope – that this change takes the form of relatively deprived neighbourhoods experiencing an improvement in facilities and amenities, rather than the kind of wholesale demolition that was deemed necessary for the Heygate estate. But the bad aspects of gentrification include inadequate provision for existing residents and businesses affected by the soaring prices, loss of local character, uninhibited property grabs, and so on. It’s complicated, for sure.

(A word here: some folks in the Guardian comments have deplored the Heygate development, and my impression has been that some residents were compelled to move against their wishes. I rather fear that there is some truth too in the suggestions that the people who will benefit most will be property developers. But I spent a considerable amount of time in the mid-1990s in the adjacent Aylesbury estate, a similarly hideous brutalist high-rise development plagued with social problems. Any sense of community that existed there – and there certainly was some – survived in spite of the disastrous social planning that had created these “living” spaces, not because of it. Moreover, I think we should be extremely wary of romanticizing a development that owed its existence to very similar circumstances: it was built on the ruins of postwar slums where deprivation coexisted with a sense of community, and from which residents were similarly rehoused or displaced.)

In any event, it is essential that studies of complexity and self-organization in social systems are never used to justify the status quo. They need to be accompanied by moral and ethical decisions about the kind of society we want to have. The whole point of much of this work, especially in agent-based modelling, is to show us the potential consequences of the choices we make: if we set things up this way, the result is likely to be this. They might save us from striving for solutions that are simply not attainable, or not unless we make some changes to the underlying rules. This, of course, is nothing more than Hume’s admonition not to confuse an “is” with an “ought”. The theories and models by no means absolve us from the responsibility of deciding what kind of society we want; indeed, they might hopefully challenge prejudices and dogmas about that. I hesitate to suggest that this approach is “apolitical”, since science rarely is, and in social science in particular it is very hard to be sure that we do not pose a question in a way that embodies or endorses certain preconceptions. And I feel that sometimes this kind of research operates in too much of a political vacuum, as though the researchers are reluctant to acknowledge or explore the real social and political implications of their work.

So I am glad that the issue came up. Here’s the article.

___________________________________________________________________________

Grumble all you like that Brixton’s covered market, once called a “24-hour supermarket” by the local police, has been colonised by trendy boutique restaurants. The fact is that the gentrification of what was once an edgy part of London is almost a law of nature.

“Urban gentrification”, say Sergio Porta, professor of urban design at Strathclyde University in Glasgow, and his coworkers in London and Italy in a new paper, “is a natural force underpinning the evolution of cities.” Their research reveals that Brixton shares features in common with other once down-at-heel London districts that have recently seen the invasion of farmers’ markets and designer coffee shops, such as Battersea and Telegraph Hill. These characteristics, they say, make such neighbourhoods ripe for gentrification.

Whether it is the Northern Quarter in Manchester, Harlem in New York or pretty much everywhere in central Paris, gentrification is rife in the world’s major cities. You know the signs: one minute the local pub gets a facelift, the next minute everyone is reading the Guardian and sipping lattes, and you daren’t even look at the property prices.

The implications for demographics, crime, transport and economics make it vital for planners and local authorities to get a grasp of what drives gentrification. Urban theorists have debated that for decades. According to one view the artists kick it off, as they did in Notting Hill, moving into cheap housing and transforming the area from poor to bohemian – then investors and families follow. Another view says that the developers and public agencies come first, buying up cheap property and then selling it for a profit to the middle classes.

Porta and his colleagues have focused instead on the physical attributes that seem to make an area ripe for – or vulnerable to, depending on your view – gentrification. Do different neighbourhoods share the same features? The team looked at five parts of London that have gone upmarket in the past decade or so: Brixton, Battersea, Telegraph Hill, Barnsbury and Dalston.

All of them are some distance from the city centre. The housing is typically dense but modest: undistinguished terraced houses two or three storeys high, often of Victorian vintage. “This picture is pretty much that of a traditional neighborhood, far away from the modernist model of big buildings”, says Porta.

But the key issue, the researchers say, is how the local street network is arranged, and how it is plugged into the rest of the city. Each street can be assigned a value of the awkwardly named “betweenness centrality”: a measure of how likely you are to pass along or across it on the shortest path between any two points in the area. It’s a purely geometric quantity that can be calculated directly from a map.
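For readers who want to see what “betweenness centrality” involves in practice, here is a minimal sketch, not the method used in Porta’s paper. It assumes streets are treated as nodes of a graph, with a link between two streets wherever they meet, and it counts every step between streets as equal, ignoring street lengths (real analyses usually weight by distance). It uses the standard Brandes algorithm. The street names are hypothetical, invented for illustration only.

```python
from collections import deque


def betweenness_centrality(graph):
    """Unnormalised node betweenness for an undirected, unweighted graph.

    graph: dict mapping each node (here, a street) to a list of neighbours
    (the streets it meets). Uses Brandes' algorithm: one breadth-first
    search per source, then back-propagation of path 'dependencies'.
    """
    bc = {v: 0.0 for v in graph}
    for s in graph:
        # Breadth-first search from s: count shortest paths (sigma) to each
        # node and record each node's predecessors on those paths.
        stack = []
        preds = {v: [] for v in graph}
        sigma = {v: 0 for v in graph}
        dist = {v: -1 for v in graph}
        sigma[s] = 1
        dist[s] = 0
        queue = deque([s])
        while queue:
            v = queue.popleft()
            stack.append(v)
            for w in graph[v]:
                if dist[w] < 0:  # first time we reach w
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:  # v lies on a shortest path to w
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Visit nodes farthest from s first, sharing out each node's
        # dependency among its predecessors in proportion to path counts.
        delta = {v: 0.0 for v in graph}
        while stack:
            w = stack.pop()
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                bc[w] += delta[w]
    # Each unordered pair of streets was counted once from each end.
    return {v: c / 2 for v, c in bc.items()}


# Hypothetical toy network: two quiet closes reach each other only via
# local streets and a through road.
streets = {
    "Ring Road": ["Market Street", "Station Road"],
    "Market Street": ["Ring Road", "Elm Close"],
    "Station Road": ["Ring Road", "Oak Lane"],
    "Elm Close": ["Market Street"],
    "Oak Lane": ["Station Road"],
}

scores = betweenness_centrality(streets)
for name, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {score}")
```

In this toy layout the through road lies on the most shortest paths and scores highest, while the dead-end closes score zero, which is the kind of contrast between busy borders and calm interiors the study describes.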

All of the five districts in the study have major roads with high betweenness centrality along their borders, but not through their centres. These roads provide good connections to the rest of the city without disrupting the neighbourhood. Smaller “local main” streets penetrate inside the district, providing easy access but not noise or danger. “It’s this balance between calmness and urban buzz within easy reach that is one of the conditions for gentrification”, says Porta. These conclusions rely only on geography: on what anyone can go and measure for themselves, not on the particular history of a neighbourhood or the plans of councillors and developers. Looked at this way, the researchers are studying city evolution much as biologists study natural evolution – almost as if the city itself were a natural organism.

This idea that cities obey laws beyond the reach of planning goes back to social theorist Lewis Mumford in the 1930s, who described the growth of cities as “amoeboid”. It was developed in the 1950s by the influential urban theorist Jane Jacobs, who argued that the forced redevelopment of American inner cities was destroying their inherent vibrancy.

Jacobs’ views on the spontaneous self-organization of urban environments anticipated modern work on ecosystems and other natural “complex systems”. Many urban theorists now believe that city growth should be considered a kind of natural history, and be studied scientifically using the tools of complexity theory rather than being forced to conform to some planner’s idea of how growth should occur.

Gentrification is not just “natural” but healthy for cities, Porta says: it’s a reflection of their ability to adapt, a facet of their resilience. The alternative for areas that lack the prerequisites – for example, modernist tower blocks, which cannot acquire the magic values of housing density and frontage height – is the wrecker’s ball, like that recently taken to the notorious Heygate estate in south London.

The new findings could have predicted that fate. By the same token, they might indicate where gentrification will happen next. Porta is wary of forecasting that without proper research, but he says that Lower Tooting is one area with all the right features, and looks set to become the new Balham, just as Balham was the new Clapham.

[Postscript: I told Sergio that I would give full credit to his coworkers. They are Alessandro Venerandi of University College London, Mattia Zanella of the University of Ferrara, and Ombretta Romice of the University of Strathclyde.]

Well, so I fondly imagined as I wrote the piece below for the Guardian. Now, I’m not totally naïve – I realise that gentrification is a sensitive subject given the decidedly mixed nature of the results. Districts might become safer and more family-friendly, not to mention the fact that they serve better coffee; but at the same time they can become unaffordable to many locals, stripped of some of their traditional character, and prey to predatory developers.

I’ve seen this happen in my own area of East Dulwich. I always feel that, when I say to Londoners that this is where I live, I have to quickly explain that we moved here 20 years ago when properties were cheap and the main street was fairly run-down and populated by a mixture of quirky but decidedly un-chi-chi shops. There is no way we could afford to move here today. So yes, the parks and cafés are all very nice, but I’ve mixed feelings about the demographic shifts and I’m dismayed by the stupid property prices.

So it was no surprise to find such mixed feelings reflected in the readers’ comments on my piece, many of which were pleasingly thoughtful and interesting compared to the snarky feedback one often gets on Comment is Free. But what I hadn’t anticipated was the Twitter response, where there seemed to be a sense in some quarters that my remarks on gentrification betrayed a rather sinister agenda. Admittedly, some of this was just ideological cant (ironically of a Marxist persuasion, I suspect), but some of the critical feedback offered food for thought on how notions of self-organization and complexity applied to urban growth can be received – responses that I hadn’t anticipated or encountered before.

Some felt that to regard gentrification, or any other aspect of urban development, as something akin to a “natural” process is to offer a convenient smokescreen for the fact that many such processes are driven by profit, greed and venality – or as some would say, by capital. To consider these processes in naturalistic terms, they said, is to risk disguising their political and economic origins, and to belittle the sometimes dismaying social consequences. Indeed, it seems that for some people this whole approach smacks of a kind of social Darwinism of the same ilk as that which proclaims that inequalities are natural and inevitable and that it is therefore fruitless to deplore them.

I can understand this point of view to some degree, for certainly a “naturalism” based on a misappropriation of Darwinian ideas has been used in the past to excuse the rapaciousness of capitalist economies. But to imagine that this is what a modern “complexity” approach to social phenomena is all about seems to me to reflect a deep and possibly even dangerous confusion. The aim of such work is, in general, to understand how certain consequences emerge from the social and institutional structures we create. These consequences might sometimes be highly non-intuitive in ways that simple cause-and-effect narratives can’t hope to capture. They are intended to be partly descriptive – what are the key features that characterize such phenomena; how are they different in different instances? – and partly explanatory. Often the methods involve agent-based modelling, starting from the question: if the agents are allowed or constrained to interact in such and such a way, what are the results likely to be? In the current case, the question is: why do some areas undergo gentrification, but not others? I can’t begin to imagine why, regardless of how you feel about gentrification or what its socio-political causes might be, that question would not be of interest.

But to see such explorations as a kind of justification of what it is they seek to understand is to totally misconstrue the object. Look at it this way. There has been a great deal of work done on traffic flows, in particular to understand how they break down and become congested. It would be bizarre to suggest that such studies are seeking to excuse or justify congestion. On the contrary, their aim is generally to find the causative influences of congestion, so that traffic rules or networks might be better designed to avoid it. Similarly, attempts to understand economic crashes and recessions using ideas from complex-systems theory don’t for a moment take as their starting point the idea that, if we can show why such events emerge from our existing economic systems, we have somehow shown that these are things we must just lie down and accept. (Indeed, amongst other things they can counter the foolish delusion, popular pre-2008, that such fluctuations are a thing of the past.)

Now, you might say that I did nonetheless begin my Guardian piece with the suggestion that (according to the researchers whose work it describes) gentrification is “almost a law of nature”. This is certainly what the paper implies: that this phenomenon is simply a part of the cyclic change and renewal that cities experience. One would surely hope (I would hope) that this change comes about through relatively deprived neighbourhoods gaining better facilities and amenities, rather than through the kind of wholesale demolition that was deemed necessary for the Heygate estate. But the bad aspects of gentrification include inadequate provision for existing residents and businesses affected by soaring prices, loss of local character, uninhibited property grabs, and so on. It’s complicated, for sure.

(A word here: some folks in the Guardian comments have deplored the Heygate development, and my impression has been that some residents were compelled to move against their wishes. I rather fear that there is some truth too in the suggestions that the people who will benefit most will be property developers. But I spent a considerable amount of time in the mid-1990s in the adjacent Aylesbury estate, a similarly hideous brutalist high-rise development plagued with social problems. Any sense of community that existed there – and there certainly was some – survived in spite of the disastrous social planning that had created these “living” spaces, not because of it. Moreover, I think we should be extremely wary of romanticizing a development that owed its existence to very similar circumstances: it was built on the ruins of postwar slums where deprivation coexisted with a sense of community, and from which residents were similarly rehoused or displaced.)

In any event, it is essential that studies of complexity and self-organization in social systems are never used to justify the status quo. They need to be accompanied by moral and ethical decisions about the kind of society we want to have. The whole point of much of this work, especially in agent-based modelling, is to show us the potential consequences of the choices we make: if we set things up this way, the result is likely to be this. They might save us from striving for solutions that are simply not attainable, or not unless we make some changes to the underlying rules. This, of course, is nothing more than Hume’s admonition not to confuse an “is” with an “ought”. The theories and models by no means absolve us from the responsibility of deciding what kind of society we want; indeed, they might even challenge prejudices and dogmas about that. I hesitate to suggest that this approach is “apolitical”, since science rarely is, and in social science in particular it is very hard to be sure that we do not pose a question in a way that embodies or endorses certain preconceptions. And I feel that sometimes this kind of research operates in too much of a political vacuum, as though the researchers are reluctant to acknowledge or explore the real social and political implications of their work.

So I am glad that the issue came up. Here’s the article.

___________________________________________________________________________

Grumble all you like that Brixton’s covered market, once called a “24-hour supermarket” by the local police, has been colonised by trendy boutique restaurants. The fact is that the gentrification of what was once an edgy part of London is almost a law of nature.

“Urban gentrification”, say Sergio Porta, professor of urban design at Strathclyde University in Glasgow, and his coworkers in London and Italy in a new paper, “is a natural force underpinning the evolution of cities.” Their research reveals that Brixton shares features with other once down-at-heel London districts that have recently seen the invasion of farmers’ markets and designer coffee shops, such as Battersea and Telegraph Hill. These characteristics, they say, make such neighbourhoods ripe for gentrification.

Whether it is the Northern Quarter in Manchester, Harlem in New York or pretty much everywhere in central Paris, gentrification is rife in the world’s major cities. You know the signs: one minute the local pub gets a facelift, the next minute everyone is reading the Guardian and sipping lattes, and you daren’t even look at the property prices.

The implications for demographics, crime, transport and economics make it vital for planners and local authorities to get a grasp of what drives gentrification. Urban theorists have debated that for decades. According to one view the artists kick it off, as they did in Notting Hill, moving into cheap housing and transforming the area from poor to bohemian – then investors and families follow. Another view says that the developers and public agencies come first, buying up cheap property and then selling it for a profit to the middle classes.

Porta and his colleagues have focused instead on the physical attributes that seem to make an area ripe for – or vulnerable to, depending on your view – gentrification. Do different neighbourhoods share the same features? The team looked at five parts of London that have gone upmarket in the past decade or so: Brixton, Battersea, Telegraph Hill, Barnsbury and Dalston.

All of them are some distance from the city centre. The housing is typically dense but modest: undistinguished terraced houses two or three storeys high, often of Victorian vintage. “This picture is pretty much that of a traditional neighborhood, far away from the modernist model of big buildings”, says Porta.

But the key issue, the researchers say, is how the local street network is arranged, and how it is plugged into the rest of the city. Each street can be assigned a value of the awkwardly named “betweenness centrality”: a measure of how likely you are to pass along or across it on the shortest path between any two points in the area. It’s a purely geometric quantity that can be calculated directly from a map.
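To make that definition concrete, here is a minimal sketch of street (edge) betweenness, assuming a street network represented as an unweighted graph whose nodes are junctions and whose edges are street segments. It uses Brandes’ standard shortest-path counting algorithm. The function name `edge_betweenness` and the five-junction toy network are illustrative inventions. This is not the procedure Porta and his colleagues used, since their analysis may weight streets by length and normalize the scores differently. When several shortest routes between two points tie, each route receives an equal share of that pair’s count.

```python
from collections import deque


def edge_betweenness(adj):
    """Unnormalized edge betweenness for an undirected, unweighted graph.

    adj maps each junction to a list of neighbouring junctions (hypothetical
    toy representation). Each unordered pair of junctions contributes 1 in
    total, split equally among its shortest paths.
    """
    scores = {frozenset((u, v)): 0.0 for u in adj for v in adj[u]}
    for s in adj:
        # Breadth-first search from s: count shortest paths (sigma) to each
        # junction and record the predecessors on those paths.
        order = []
        preds = {v: [] for v in adj}
        sigma = dict.fromkeys(adj, 0)
        dist = dict.fromkeys(adj, -1)
        sigma[s], dist[s] = 1, 0
        queue = deque([s])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in adj[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Walk back from the farthest junctions, passing each street its
        # share of the shortest paths that run through it.
        delta = dict.fromkeys(adj, 0.0)
        while order:
            w = order.pop()
            for v in preds[w]:
                share = sigma[v] / sigma[w] * (1.0 + delta[w])
                scores[frozenset((v, w))] += share
                delta[v] += share
    # Every pair was counted once from each end, so halve the totals.
    return {street: value / 2 for street, value in scores.items()}


# Toy network: a square of four junctions (0-1-2-3) plus a dead end at 4.
street_graph = {0: [1, 3, 4], 1: [0, 2], 2: [1, 3], 3: [2, 0], 4: [0]}
scores = edge_betweenness(street_graph)
for street, value in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(tuple(sorted(street)), value)
```

In this toy network the dead-end segment (0, 4) scores highest, 4.0, because every route to or from junction 4 must use it, while the two segments meeting at the far corner, junction 2, score lowest at 2.5. The calculation needs only the map’s connectivity, which is the sense in which the measure is purely geometric.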

All of the five districts in the study have major roads with high betweenness centrality along their borders, but not through their centres. These roads provide good connections to the rest of the city without disrupting the neighbourhood. Smaller “local main” streets penetrate inside the district, providing easy access but not noise or danger. “It’s this balance between calmness and urban buzz within easy reach that is one of the conditions for gentrification”, says Porta. These conclusions rely only on geography: on what anyone can go and measure for themselves, not on the particular history of a neighbourhood or the plans of councillors and developers. Looked at this way, the researchers are studying city evolution much as biologists study natural evolution – almost as if the city itself were a natural organism.

This idea that cities obey laws beyond the reach of planning goes back to the 1930s, when the social theorist Lewis Mumford described the growth of cities as “amoeboid”. It was developed in the 1950s by the influential urban theorist Jane Jacobs, who argued that the forced redevelopment of American inner cities was destroying their inherent vibrancy.

Jacobs’ views on the spontaneous self-organization of urban environments anticipated modern work on ecosystems and other natural “complex systems”. Many urban theorists now believe that city growth should be considered a kind of natural history, and be studied scientifically using the tools of complexity theory rather than being forced to conform to some planner’s idea of how growth should occur.

Gentrification is not just “natural” but healthy for cities, Porta says: it’s a reflection of their ability to adapt, a facet of their resilience. The alternative for areas that lack the prerequisites – for example, modernist tower blocks, which cannot acquire the magic values of housing density and frontage height – is the wrecker’s ball, like that recently taken to the notorious Heygate estate in south London.

The new findings could have predicted that fate. By the same token, they might indicate where gentrification will happen next. Porta is wary of forecasting that without proper research, but he says that Lower Tooting is one area with all the right features, and looks set to become the new Balham, just as Balham was the new Clapham.

[Postscript: I told Sergio that I would give full credit to his coworkers. They are Alessandro Venerandi of University College London, Mattia Zanella of the University of Ferrara, and Ombretta Romice of the University of Strathclyde.]

Wednesday, November 19, 2014

Too funky in here

This piece for my Music Instinct column in Sapere magazine should be out and about in Italian any time now. I’ve talked about this deal before, but no harm in returning to it with the right soundtrack.

_______________________________________________________________________

I’ve got plans to discuss some seriously challenging music in the next column, so in this one I’m going to treat you. Look up Bootsy Collins’ song Stretchin’ Out and make sure you have room to dance. Pure hedonism, isn’t it? Well, maybe not, actually, because Bootsy is taking splendid advantage of our cognitive predispositions in order to do several things at once. That gutsy and fabulously ornate bass guitar is laying down the syncopated groove that gets the feet twitching, the backing vocalists provide the blissed-out melody, and the sax and guitar solos ice the cake with their soaring improvisations.

Yes, there’s a lot going on. But the role of each of these “voices” in the mix isn’t arbitrary. It now seems that the high-pitched vocals and solos take care of melodic duties because we hear melody best in the high register, while Bootsy’s bass thumps out the rhythm because our ears and minds are best attuned to rhythmic structures at low pitches.

This division of labour isn’t, after all, just a feature of funk: it seems to happen throughout the music of the world. The pianist’s left hand is usually her rhythmic anchor and her right is the source of melody, whether she’s playing Haydn or boogie-woogie. Low-pitched percussive instruments carry the rhythm in Indian classical music (where high-register sitar takes the melodic role) or Indonesian gamelan.

Psychologist Laurel Trainor and her collaborators in Canada have shed light on why this is. When they studied people listening to polyphonic music – which has several simultaneous melodic lines or “voices” – using magnetic sensors to monitor the subjects’ electrical brain activity, they found that each voice was stored as a separate “memory trace”, and that the most salient voice (the one listeners were most attuned to) was the one with the highest pitch.

In more recent experiments using electrical sensors to detect the characteristic brain responses to “errors” in what a listener hears, Trainor and colleagues found that the opposite is true for rhythm. The lower-pitched the tones are, the better we are at discriminating differences in their timing. This pitch-dependent sensitivity to rhythm, the researchers concluded, arises from the basic physiology of the inner ear, where the cochlea converts acoustic waves to nerve signals. We’re designed, it seems, for Bootsy’s freaky funk-outs.

Monday, November 17, 2014

Genes and IQ: some clarifications

I hadn’t anticipated that my article for Prospect on the “language of genes” would spark a discussion so focused on the question of the heritability of IQ (which was just a small part of what the piece was about). Judging from the reverberations on Twitter, it looks as though some further clarifications might be useful.

The point of my article is not to contest whether cognitive abilities are heritable. Clearly they are. The point is that this fact does not necessarily make it meaningful to talk about “genes for” those abilities. Geneticists might argue that this is a straw man – that they recognize very well that the “genes for” trope is often unhelpful. This is true up to a point, but my argument is that this recognition came later than it needed to, and that even now the narratives and rhetoric used in academic research on genetics and genomics fail to distance themselves sufficiently from that legacy.

As for the issue of genes and IQ, I’ve said already that the evidence is contested, and it seems clear that there is a fair degree of polarization on the matter that is not helping the debate.

First, I should state clearly that there is very good reason to believe that, whatever cognitive abilities IQ tests measure, these abilities are heritable to a significant degree. Of course one can argue about what exactly it is that IQ tests measure, and the limitations of those tests are widely (and rightly) advertised. But whatever the rights and wrongs of using IQ as a measure of “intelligence”, or of using academic achievement as an indication of a person’s intellectual capacity, I believe that such “formal” measures tell us something about an individual’s cognitive abilities, and that there is reason to believe these abilities are to some degree genetic.