I have a short review of The Future of the Brain (Princeton University Press), edited by Gary Marcus & Jeremy Freeman, online at Prospect. Here’s a slightly longer version. I will say more about the book and topic in my Prospect blog soon.

_________________________________________________________________________

If you want a breezy, whistle-stop tour of the latest brain science, look elsewhere. But if you’re up for chunky, rather technical expositions by real experts, this book repays the effort. The message lies in the very (and sometimes bewildering) diversity of the contributions: despite its dazzling array of methods to study the brain, from fMRI to genetic techniques for labeling and activating individual neurons, this is still a primitive field largely devoid of conceptual and theoretical frameworks. As the editors put it, “Where some organs make sense almost immediately once we understand their constituent parts, the brain’s operating principles continue to elude us.”

Among the stimulating ideas on offer is neuroscientist Anthony Zador’s suggestion that the brain might lack unifying principles, but merely gets the job done with a makeshift “bag of tricks”. There’s fodder too for sociologists of science: several contributions evince the spirit of current projects that aim to amass dizzying amounts of data about how neurons are connected, seemingly in the blind hope that insight will fall out of the maps once they are detailed enough.

All the more reason, then, for the skeptical voices reminding us that “data analysis isn’t theory”, that current neuroscience is “a collection of facts rather than ideas”, and that we don’t even know what kind of computer the brain is. All the same, the “future” of the title might be astonishing: will “neural dust” scattered through the brain record all our thoughts? And would you want that uploaded to the Cloud?

Thursday, December 18, 2014

Wednesday, December 17, 2014

The restoration of Chartres: second thoughts

Several people have asked me what I think about the “restoration” of Chartres Cathedral, in the light of the recent piece by Martin Filler for the New York Review of Books. (Here, in case you’re wondering, is why anyone should wish to solicit my humble opinion on the matter.) I have commented on this before here, but the more I hear about the work, the less sanguine I feel. Filler makes some good arguments against, the most salient, I think, being the fact that this contravenes normal conservation protocols: the usual approach now, especially for paintings, is to do what we can to rectify damage (such as reattaching flakes of paint) but otherwise to leave alone. In my earlier blog I mentioned the case of York Cathedral, where masons actively replace old, crumbling masonry with new – but this is a necessary affair to preserve the integrity of the building, whereas slapping on a lick of paint isn’t. And the faux marble on the columns looks particularly hideous and unnecessary. To judge from the photos, the restoration looks far more tacky than I had anticipated.

It is perhaps not ideal that visitors to Chartres come away thinking that the wonderful, stark gloom is what worshippers in the Middle Ages would have experienced too. But it seems unlikely that the new paint job is going to get anyone closer to an authentic experience. Worse, it’s the kind of thing that, once done, is very hard to undo. It’s good to recognize that the reverence with which we generally treat the fabric of old buildings now is very different from the attitudes of earlier times – bishops would demand that structures be knocked down when they looked too old-fashioned and replaced with something à la mode, and during the nineteenth-century Gothic revival architects like Eugène Viollet-le-Duc would take all kinds of liberties with their “restorations”. But this is no reason why we should act the same way. So while there is still a part of me that is intrigued by the thought of being able to see the interior of Chartres in something close to its original state, I have come round to thinking that the cathedral should have been left alone.

Monday, December 15, 2014

Beyond the crystal

Here’s my Material Witness column from the November issue of Nature Materials (13, 1003; 2014).

___________________________________________________________________________

The International Year of Crystallography has understandably been a celebration of order. From René-Just Haüy’s prescient drawings of stacked cubes to the convolutions of membrane proteins, Nature’s Milestones in Crystallography revealed a discipline able to tackle increasingly complex and subtle forms of atomic-scale regularity. But it seems fitting, as the year draws to a close, to recognize that the road ahead is far less tidy. Whether it is the introduction of defects to control semiconductor band structure [1], the nanoscale disorder that can improve the performance of thermoelectric materials [2], or the creation of nanoscale conduction pathways in graphene [3], the future of solid-state materials physics seems increasingly to depend on a delicate balance of crystallinity and its violation. In biology, the notion of “structure” has always been less congruent with periodicity, but ever since Schrödinger’s famous “aperiodic crystal” there has been a recognition that a deeper order may underpin the apparent molecular turmoil of life.

The decision to redefine crystallinity to encompass the not-quite-regularity of quasicrystals is, then, just the tip of the iceberg when it comes to widening the scope of crystallography. Even before quasicrystals were discovered, Ruelle asked if there might exist “turbulent crystals” without long-ranged order, exhibiting fuzzy diffraction peaks [4]. The goal of “generalizing” crystallography beyond its regular domain has been pursued most energetically by Mackay [5], who anticipated the link between quasicrystals and quasiperiodic tilings [6]. More recently, Cartwright and Mackay have suggested that structures such as crystals might be best characterized not by their degree of order as such but by the algorithmic complexity of the process by which they are made – making generalized crystallography an information science [7]. As Mackay proposed, “a crystal is a structure the description of which is much smaller than the structure itself, and this view leads to the consideration of structures as carriers of information and on to wider concerns with growth, form, morphogenesis, and life itself” [5].
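Mackay’s remark that a crystal’s description is much smaller than the structure itself can be given a rough, hands-on illustration. The sketch below is my own toy example, not anything taken from the cited papers: it uses the compressed size of a string of layer labels as a crude stand-in for “length of description”. The function name `compressed_fraction`, the choice of zlib and the three-letter alphabet are all assumptions made purely for illustration, and compression is only a loose proxy for true algorithmic complexity.

```python
import random
import zlib


def compressed_fraction(sequence: str) -> float:
    """Compressed size divided by raw size (smaller means a shorter description)."""
    raw = sequence.encode("ascii")
    return len(zlib.compress(raw, 9)) / len(raw)


# A perfectly periodic "crystal": the motif ABC repeated many times.
periodic = "ABC" * 3000

# The same three symbols arranged at random (fixed seed so the run is repeatable).
rng = random.Random(0)
disordered = "".join(rng.choice("ABC") for _ in range(9000))

print(f"periodic:   {compressed_fraction(periodic):.3f}")
print(f"disordered: {compressed_fraction(disordered):.3f}")
```

The periodic string should shrink to a tiny fraction of its length, while the random one cannot be squeezed much below the information content of its symbols. Real materials presumably sit somewhere in between, which is where a finer measure is needed.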

These ideas have now been developed by Varn and Crutchfield to provide what they call an information-theoretic measure for describing materials structure [8]. Their aim is to devise a formal tool for characterizing the hitherto somewhat hazy notion of disorder in materials, thereby providing a framework that can encompass anything from perfect crystals to totally amorphous materials, all within a rubric of “chaotic crystallography”.

Their approach is again algorithmic. They introduce the concept of “ε-machines”, which are minimal operations that transform one state into another [9]: for example, one ε-machine can represent the appearance of a random growth fault. Varn and Crutchfield present nine ε-machines relevant to crystallography, and show how their operation to generate a particular structure is a kind of computation that can be assigned a Shannon entropy, like more familiar computations involving symbolic manipulations. Any particular structure or arrangement of components can then be specified in terms of an initially periodic arrangement of components and the amount of ε-machine computation needed to generate from it the structure in question. The authors demonstrate how, for a simple one-dimensional case, diffraction data can be inverted to reconstruct the ε-machine that describes the disordered material structure.
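To get a feel for how a structure-generating process can be assigned a Shannon entropy, here is a minimal sketch under loudly stated assumptions. It is not Varn and Crutchfield’s ε-machine construction: it is a toy model of my own in which close-packed layers normally advance A→B→C→A, and each new layer independently steps the “wrong” way with some probability. The names `grow_stack`, `binary_entropy` and `empirical_fault_entropy`, and the independent-fault assumption itself, are hypothetical choices for illustration only.

```python
import math
import random

LAYERS = "ABC"


def binary_entropy(p: float) -> float:
    """Shannon entropy, in bits, of a two-outcome choice with probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def grow_stack(n_layers: int, fault_prob: float, seed: int = 0) -> str:
    """Grow a stack of layers; each step is a fault with probability fault_prob."""
    rng = random.Random(seed)
    position = 0
    stack = [LAYERS[position]]
    for _ in range(n_layers - 1):
        # Normal growth advances by +1; a fault steps by -1 instead.
        step = -1 if rng.random() < fault_prob else 1
        position = (position + step) % 3
        stack.append(LAYERS[position])
    return "".join(stack)


def empirical_fault_entropy(stack: str) -> float:
    """Estimate bits per layer from the observed fraction of faulted steps."""
    steps = [(LAYERS.index(b) - LAYERS.index(a)) % 3
             for a, b in zip(stack, stack[1:])]
    fault_fraction = sum(1 for s in steps if s == 2) / len(steps)
    return binary_entropy(fault_fraction)


for p in (0.0, 0.05, 0.5):
    stack = grow_stack(20000, p)
    print(p, stack[:12], round(empirical_fault_entropy(stack), 3))
```

With no faults the entropy is zero bits per layer (a perfect crystal), and it climbs towards one bit per layer as the faults become a coin toss. The real scheme allows the faults to have memory and structure, which this independent-fault toy deliberately ignores.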

Quite how this will play out in classifying and distinguishing real materials structures remains to be seen. But it surely underscores the point made by D’Arcy Thompson, the pioneer of morphogenesis, in 1917: “Everything is what it is because it got that way” [10].

1. Seebauer, E. G. & Noh, K. W. Mater. Sci. Eng. Rep. 70, 151-168 (2010).

2. Snyder, G. J. & Toberer, E. S. Nat. Mater. 7, 105-114 (2008).

3. Lahiri, J., Lin, Y., Bozkurt, P., Oleynik, I. I. & Batzill, M., Nat. Nanotech. 5, 326-329 (2010).

4. Ruelle, D. Physica A 113, 619-623 (1982).

5. Mackay, A. L. Struct. Chem. 13, 215-219 (2002).

6. Mackay, A. L. Physica A 114, 609-613 (1982).

7. Cartwright, J. H. E. & Mackay, A. L., Phil. Trans. R. Soc. A 370, 2807-2822 (2012).

8. Varn, D. P. & Crutchfield, J. P., preprint http://www.arxiv.org/abs/1409.5930 (2014).

9. Crutchfield, J. P., Nat. Phys. 8, 17-24 (2012).

10. Thompson, D’A. W., On Growth and Form. Cambridge University Press, Cambridge, 1917.

Friday, December 12, 2014

Why some junk DNA is selfish, but selfish genes are junk

“Horizontal gene transfer is more common than thought”: that's the message of a nice article in Aeon. I first came across it via a tweeted remark to the effect that this was the ultimate expression of the selfish gene. Why, genes are so selfish that they’ll even break the rules of inheritance by jumping right into the genomes of another species!

Now, that is some trick. I mean, the gene has to climb out of its native genome – and boy, those bonds are tough to break free from! – and then swim through the cytoplasm to the cell wall, wriggle through and then leap out fearlessly into the extracellular environment. There it has to live in hope of a passing cell before it gets degraded, and if it’s in luck then it takes out its diamond-tipped cutting tool and gets to work on…

Wait. You’re telling me the gene doesn’t do all this by itself? You’re saying that there is a host of genes in the donor cell that helps it happen, and a host of genes in the receiving cell to fix the new gene in place? But I thought the gene was being, you know, selfish? Instead, it’s as if it has sneaked into a house hoping to occupy it illegally, only to find a welcoming party offering it a cup of tea and a bed. Bah!

No, but look, I’m being far too literal about this selfishness, aren’t I? Well, aren’t I? Hmm, I wonder – because look, the Aeon page kindly directs me to another article by Itai Yanai and Martin Lercher that tells me what this selfishness business is all about.

And I think: have I wandered into 1976?

You see, this is what I find:

“Yet viewing our genome as an elegant and tidy blueprint for building humans misses a crucial fact: our genome does not exist to serve us humans at all. Instead, we exist to serve our genome, a collection of genes that have been surviving from time immemorial, skipping down the generations. These genes have evolved to build human ‘survival machines’, programmed as tools to make additional copies of the genes (by producing more humans who carry them in their genomes). From the cold-hearted view of biological reality, we exist only to ensure the survival of these travellers in our genomes... The selfish gene metaphor remains the single most relevant metaphor about our genome.”

Gosh, that really is cold-hearted isn’t it? It makes me feel so sad. But what leads these chaps to this unsparing conclusion, I wonder?

This: “From the viewpoint of natural selection, each gene is a long-lived replicator, its essential property being its ability to spawn copies.”

Then evolution, it seems, isn’t doing its job very well. Because, you see, I just took a gene and put it in a beaker and fed it with nucleotides, and it didn’t make a single copy. It was a rubbish replicator. So I tried another gene. Same thing. The funny thing was, the only way I could get the genes to replicate was to give them the molecular machinery and ingredients. Like in a PCR machine, say – but that’s like putting them on life support, right? The only way they’d do it without any real intervention was if I put the gene in a genome in a cell. So it really looked to me as though cells, not genes, were the replicators. Am I doing something wrong? After all, I am reliably informed that the gene “is on its own as a ‘replicator’” – because “genes, but no other units in life’s hierarchy, make exact copies of themselves in a pool of such copies”. But genes no more “make exact copies of themselves in a pool of such copies” than printed pages (in a stack of other pages) make exact copies of themselves on the photocopier.

Oh, but silly me. Of course the genes don’t replicate by themselves! It is on its own as a replicator but doesn’t replicate on its own! (Got that?) No, you see, they can only do the job all together – ideally in a cell. “When looking at our genome”, say Yanai and Lercher, “we might take pride in how individual genes co-operate in order to build the human body in seemingly unselfish ways. But co-operation in making and maintaining a human body is just a highly successful strategy to make gene copies, perfectly consistent with selfishness.”

To be honest, I’ve never taken very much pride in what my genes do. But anyway: perfectly consistent with selfishness? Let me see. I pay my taxes, I obey the laws, I contribute to charities that campaign for equality, I try to be nice to people, and a fair bit of this I do because I feel it is a pretty good thing to be a part of a society that functions well. I figure that’s probably best for me in the long run. Aha! – so what I do is perfectly consistent with selfishness. Well yes, but look, you’re not going to call me selfish just because I want to live in a well-ordered society, are you? No, but then I have intentions and thoughts of the future, I have acquired moral codes and so on – genes don’t have any of these things. Hmm… so how exactly does that make the metaphor of “selfishness” work? Or more precisely, how does it make selfishness a better metaphor than cooperativeness? If “genes don’t care”, then neither metaphor is better than the other. It’s just stuff that happens when genes get together.

But no, wait, maybe I’m missing the point. Genes are selfish because they compete with each other for limited resources, and only the best replicators – well no, only those housed in the cells or organisms that are themselves the best at replicating their genomes – survive. See, it says here: “Those genes that fail at replicating are no longer around, while even those that are good face stiff competition from other replicators. Only the best can secure the resources needed to reproduce themselves.”

This is the bullshit at the heart of the issue. “Good genes” face stiff competition from who exactly? Other replicators? So a phosphatase gene is competing with a dehydrogenase gene? (Yeah, who would win that fight?) No. No, no, no. This, folks, this is what I would bet countless people believe because of the bad metaphor of selfishness. Yet the phosphatase gene might well be doomed without the dehydrogenase gene. They need each other. They are really good friends. (These personification metaphors are great, aren’t they?) If the dehydrogenase gene gets better at its job, the phosphatase gene further down the genome just loves it, because she gets the benefit too! She just loves that better dehydrogenase. She goes round to his place and…

Hmm, these metaphors can get out of hand, can’t they?

No, if the dehydrogenase gene is competing with anyone, it’s with other alleles of the dehydrogenase gene. Genes aren’t in competition, alleles are.

(Actually even that doesn’t seem quite right. Organisms compete, and their genetic alleles affect their ability to compete. But this gives a sufficient appearance of competition among alleles that I can accept the use of the word.)

So genes only get replicated (by and large) in a genome. So if a gene is “improved” by natural selection, the whole genome benefits. But that’s just a side result – the gene doesn’t care about the others! Yet this is precisely the point. Because the gene “doesn’t care”, all you can talk about is what you see, not what you want to ascribe, metaphorically or otherwise, to a gene. An advantageous gene mutation helps the whole genome replicate. It’s not a question of who cares or who doesn’t, or what the gene “really” wants or doesn’t want. That is the outcome. “Selfishness” doesn’t help to elucidate that outcome – it confuses it.

“So why are we fooled into believing that humans (and animals and plants) rather than genes are what counts in biology?” the authors ask. They give an answer, but it’s not the right one. Higher organisms are a special case, of course, especially ones that reproduce sexually – it’s really cells that count. We’re “fooled” because cells can reproduce autonomously, but genes can’t.

So cells are where it’s at? Yes, and that’s why this article by Mike Lynch et al. calling for a meeting of evolutionary theory and cell biology is long overdue (PNAS 111, 16990; 2014). For one thing, it might temper erroneous statements like this one that Yanai and Lercher make: “Darwin showed that one simple logical principle [natural selection] could lead to all of the spectacular living design around us.” As Lynch and colleagues point out, there is abundant evidence that natural selection is only one of several evolutionary processes that have shaped cells and simple organisms: “A commonly held but incorrect stance is that essentially all of evolution is a simple consequence of natural selection.” They point out, for example, that many pathways to greater complexity of both genomes and cells don’t confer any selective fitness.

The authors end with a tired Dawkinsesque flourish: “we exist to serve our genome”. This statement has nothing to do with science – it is akin to the statement that “we are at the pinnacle of evolution”, but looking in the other direction. It is a little like saying that we exist to serve our minds – or that water falls on mountains in order to run downhill. It is not even wrong. We exist because of evolution, but not in order to do anything. Isn’t it strange how some who preen themselves on facing up to life’s lack of purpose then go right ahead and give it back a purpose?

The sad thing is that that Aeon article is actually all about junk DNA and what ENCODE has to say about it. It makes some fair criticisms of ENCODE’s dodgy definition of “function” for DNA. But it does so by examining the so-called LINE-1 elements in genomes, which are non-coding but just make copies of themselves. There used to be a word for this kind of DNA. Do you know what that word was? Selfish.

In the 1980s, geneticists and molecular biologists such as Francis Crick, Leslie Orgel and Ford Doolittle used “selfish DNA” in a strict sense, to refer to DNA sequences that just accumulated in genomes by making copies – and which did not themselves affect the phenotype (W. F. Doolittle & C. Sapienza, Nature 284, p601, and L. E. Orgel & F. H. C. Crick, p604, 1980). This stuff not only had no function, it messed things up if it got too rife: it could eventually be deleterious to the genome that it infected. Now that’s what I call selfish! – something that acts in a way that is to its own benefit in the short term while benefitting nothing else, and which ultimately harms everything.

So you see, I’m not against the use of the selfish metaphor. I think that in its original sense it was just perfect. Its appropriation to describe the entire genome – as an attribute of all genes – wasn’t just misleading, it also devalued a perfectly good use of the term.

But all that seems to have been forgotten now. Could this be the result of some kind of meme, perhaps?

Now, that is some trick. I mean, the gene has to climb out of its native genome – and boy, those bonds are tough to break free from! – and then swim through the cytoplasm to the cell wall, wriggle through and then leap out fearlessly into the extracellular environment. There it has to live in hope of a passing cell before it gets degraded, and if it’s in luck then it takes out its diamond-tipped cutting tool and gets to work on…

Wait. You’re telling me the gene doesn’t do all this by itself? You’re saying that there is a host of genes in the donor cell that helps it happen, and a host of genes in the receiving cell to fix the new gene in place? But I thought the gene was being, you know, selfish? Instead, it’s as if it has sneaked into a house hoping to occupy it illegally, only to find a welcoming party offering it a cup of tea and a bed. Bah!

No, but look, I’m being far too literal about this selfishness, aren’t I? Well, aren’t I? Hmm, I wonder – because look, the Aeon page kindly directs me to another article by Itai Yanai and Martin Lercher that tells me all about what this selfishness business is all about.

And I think: have I wandered into 1976?

You see, this is what I find:

“Yet viewing our genome as an elegant and tidy blueprint for building humans misses a crucial fact: our genome does not exist to serve us humans at all. Instead, we exist to serve our genome, a collection of genes that have been surviving from time immemorial, skipping down the generations. These genes have evolved to build human ‘survival machines’, programmed as tools to make additional copies of the genes (by producing more humans who carry them in their genomes). From the cold-hearted view of biological reality, we exist only to ensure the survival of these travellers in our genomes... The selfish gene metaphor remains the single most relevant metaphor about our genome.”

Gosh, that really is cold-hearted isn’t it? It makes me feel so sad. But what leads these chaps to this unsparing conclusion, I wonder?

This: “From the viewpoint of natural selection, each gene is a long-lived replicator, its essential property being its ability to spawn copies.”

Then evolution, it seems, isn’t doing its job very well. Because, you see, I just took a gene and put it in a beaker and fed it with nucleotides, and it didn’t make a single copy. It was a rubbish replicator. So I tried another gene. Same thing. The funny thing was, the only way I could get the genes to replicate was to give them the molecular machinery and ingredients. Like in a PCR machine, say – but that’s like putting them on life support, right? The only way they’d do it without any real intervention was if I put the gene in a genome in a cell. So it really looked to me as though cells, not genes, were the replicators. Am I doing something wrong? After all, I am reliably informed that the gene “is on its own as a “replicator” – because “genes, but no other units in life’s hierarchy, make exact copies of themselves in a pool of such copies”. But genes no more “make exact copies of themselves in a pool of such copies” than printed pages (in a stack of other pages) make exact copies of themselves on the photocopier.

Oh, but silly me. Of course the genes don’t replicate by themselves! It is on its own as a replicator but doesn’t replicate on its own! (Got that?) No, you see, they can only do the job all together – ideally in a cell. “When looking at our genome”, say Yanai and Lercher, “we might take pride in how individual genes co-operate in order to build the human body in seemingly unselfish ways. But co-operation in making and maintaining a human body is just a highly successful strategy to make gene copies, perfectly consistent with selfishness.”

To be honest, I’ve never taken very much pride in what my genes do. But anyway: perfect consistent with selfishness? Let me see. I pay my taxes, I obey the laws, I contribute to charities that campaign for equality, I try to be nice to people, and a fair bit of this I do because I feel it is a pretty good thing to be a part of a society that functions well. I figure that’s probably best for me in the long run. Aha! – so what I do is perfectly consistent with selfishness. Well yes, but look, you’re not going to call me selfish just because I want to live in a well ordered society are you? No, but then I have intentions and thoughts of the future, I have acquired moral codes and so on – genes don’t have any of these things. Hmm… so how exactly does that make the metaphor of “selfishness” work? Or more precisely, how does it make selfishness a better metaphor than cooperativeness? If “genes don’t care”, then neither metaphor is better than the other. It’s just stuff that happens when genes get together.

But no, wait, maybe I’m missing the point. Genes are selfish because they compete with each other for limited resources, and only the best replicators – well no, only those housed in the cells or organisms that are themselves the best at replicating their genomes – survive. See, it says here: “Those genes that fail at replicating are no longer around, while even those that are good face stiff competition from other replicators. Only the best can secure the resources needed to reproduce themselves.”

This is the bullshit at the heart of the issue. “Good genes” face stiff competition from who exactly? Other replicators? So a phosphatase gene is competing with a dehydrogenase gene? (Yeah, who would win that fight?) No. No, no, no. This, folks, this is what I would bet countless people believe because of the bad metaphor of selfishness. Yet the phosphatase gene might well be doomed without the dehydrogenase gene. They need each other. They are really good friends. (These personification metaphors are great, aren’t they?) If the dehydrogenase gene gets better at its job, the phosphatase gene further down the genome just loves it, because she gets the benefit too! She just loves that better dehydrogenase. She goes round to his place and…

Hmm, these metaphors can get out of hand, can’t they?

No, if the dehydrogenase gene is competing with anyone, it’s with other alleles of the dehydrogenase gene. Genes aren’t in competition, alleles are.

(Actually even that doesn’t seem quite right. Organisms compete, and their genetic alleles affect their ability to compete. But this gives a sufficient appearance of competition among alleles that I can accept the use of the word.)

So genes only get replicated (by and large) in a genome. So if a gene is “improved” by natural selection, the whole genome benefits. But that’s just a side result – the gene doesn’t care about the others! Yet this is precisely the point. Because the gene “doesn’t care”, all you can talk about is what you see, not what you want to ascribe, metaphorically or otherwise, to a gene. An advantageous gene mutation helps the whole genome replicate. It’s not a question of who cares or who doesn’t, or what the gene “really” wants or doesn’t want. That is the outcome. “Selfishness” doesn’t help to elucidate that outcome – it confuses it.

“So why are we fooled into believing that humans (and animals and plants) rather than genes are what counts in biology?” the authors ask. They give an answer, but it’s not the right one. Higher organisms are a special case, of course, especially ones that reproduce sexually – it’s really cells that count. We’re “fooled” because cells can reproduce autonomously, but genes can’t.

So cells are where it’s at? Yes, and that’s why this article by Mike Lynch et al. calling for a meeting of evolutionary theory and cell biology is long overdue (PNAS 111, 16990; 2014). For one thing, it might temper erroneous statements like this one that Yanai and Lercher make: “Darwin showed that one simple logical principle [natural selection] could lead to all of the spectacular living design around us.” As Lynch and colleagues point out, there is abundant evidence that natural selection is one of several evolutionary processes that has shaped cells and simple organisms: “A commonly held but incorrect stance is that essentially all of evolution is a simple consequence of natural selection.” They point out, for example, that many pathways to greater complexity of both genomes and cells don’t confer any selective fitness.

The authors end with a tired Dawkinsesque flourish: “we exist to serve our genome”. This statement has nothing to do with science – it is akin to the statement that “we are at the pinnacle of evolution”, but looking in the other direction. It is a little like saying that we exist to serve our minds – or that water falls on mountains in order to run downhill. It is not even wrong. We exist because of evolution, but not in order to do anything. Isn’t it strange how some who preen themselves on facing up to life’s lack of purpose then go right ahead and give it back a purpose?

The sad thing is that that Aeon article is actually all about junk DNA and what ENCODE has to say about it. It makes some fair criticisms of ENCODE’s dodgy definition of “function” for DNA. But it does so by examining the so-called LINE-1 elements in genomes, which are non-coding but just make copies of themselves. There used to be a word for this kind of DNA. Do you know what that word was? Selfish.

In the 1980s, geneticists and molecular biologists such as Francis Crick, Leslie Orgel and Ford Doolittle used “selfish DNA” in a strict sense, to refer to DNA sequences that just accumulated in genomes by making copies – and which did not themselves affect the phenotype (W. F. Doolittle & C. Sapienza, Nature 284, p601, and L. E. Orgel & F. H. C. Crick, p604, 1980). This stuff not only had no function, it messed things up if it got too rife: it could eventually be deleterious to the genome that it infected. Now that’s what I call selfish! – something that acts in a way that is to its own benefit in the short term while benefitting nothing else, and which ultimately harms everything.

So you see, I’m not against the use of the selfish metaphor. I think that in its original sense it was just perfect. Its appropriation to describe the entire genome – as an attribute of all genes – wasn’t just misleading, it also devalued a perfectly good use of the term.

But all that seems to have been forgotten now. Could this be the result of some kind of meme, perhaps?

Monday, December 08, 2014

Chemistry for the kids - a view from the vaults

At some point this is all going to become a more coherently thought-out piece, but right now I just want to show you some of the Chemical Heritage Foundation’s fabulous collection of chemistry kits through the ages. It is going to form the basis of an exhibition at some point in the future, so consider this a preview.

There is an entire social history to be told through these boxes of chemistry for kids.

Here’s one of the earliest examples, in which the chemicals come in rather fetching little wooden bottles. That’s the spirit, old chap!

I like the warning on this one: if you’re too little or too dumb to read the instructions, keep your hands off.

Sartorial tips here for the young chemist, sadly unheeded today. Tuck those ties in, mind – you don’t want them dipping in the acid. Lots of the US kits, like this one, were made by A. C. Gilbert Co. of New Haven, Connecticut, which became one of the biggest toy manufacturers in the world. The intriguing thing is that the company began in 1909 as a supplier of materials for magic shows – Alfred Gilbert was a magician. So even at this time, the link between stage magic and chemical demonstrations, which had been established in the nineteenth century, was still evident.

Girls, as you know, cannot grow up to be scientists. But if they apply themselves, they might be able to adapt their natural domestic skills to become lab technicians. Of course, they’ll only want this set if it is in pink.

But if that makes you cringe, it got far worse. Some chemistry sets were still marketed as magic shows even in the 1940s and 50s. Of course, this required that you dress up as some exotic Eastern fellow, like a “Hindu prince or Rajah”. And he needs an assistant, who should be “made up as an Ethiopian slave”. “His face and arms should be blackened with burned cork… By all means assign him a fantastic name such as Allah, Kola, Rota or any foreign-sounding word.” Remember now, these kits were probably being given to the fine young boys who would have been formulating US foreign policy in the 1970s and 80s (or, God help us, even now).

OK, so boys and girls can both do it in this British kit, provided that they have this rather weird amalgam of kitchen and lab.

Don’t look too closely, though, at the Periodic Tables pinned to the walls on either side. With apologies for the rubbish image from my phone camera, I think you can get the idea here.

This is one of my favourites. It includes “Safe experiments in atomic energy”, which you can conduct with a bit of uranium ore. Apparently, some of the Gilbert kits also included a Geiger counter. Make sure an adult helps you, kids!

Here are the manuals for it – part magic, part nuclear.

But we are not so reckless today. Oh no. Instead, you get 35 “fun activities”… with “no chemicals”. Well, I should jolly well hope not!

This one speaks volumes about its times, which you can see at a glance were the 1970s. It is not exactly a chemistry kit in the usual sense, because for once the kids are doing their experiments outside. Now they are not making chemicals, but testing for them: looking for signs of pollution and contamination in the air and the waters. Johnny Horizon is here to save the world from the silent spring.

There is still a whiff of the old connection with magic here, and with the alchemical books of secrets (which are the subject of the CHF exhibition that brought me here).

But here we are now. This looks a little more like it.

What a contrast this is with the clean, shiny brave new world of yesteryear.

Many thanks to the CHF folks for dragging these things from their vaults.

Wednesday, December 03, 2014

Pushing the boundaries

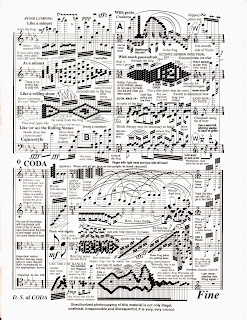

Here is my latest Music Instinct column for Sapere magazine. I collected some wonderful examples of absurdly complicated scores while working on this, but none with quite the same self-mocking wit as the one above.

___________________________________________________________________

There’s no accounting for taste, as people say, usually when they are deploring someone else’s. But there is. Psychological tests since the 1960s have suggested that what people tend to look for in music, as in visual art, is an optimal level of complexity: not too much, not too little. The graph of preference versus complexity looks like an inverted U [1].

Thus we soon grow weary of nursery rhymes, but complex experimental jazz is a decidedly niche phenomenon. So what is the ideal level of musical complexity? By some estimates, fairly low. The Beatles’ music has been shown to get generally more complex from 1962 to 1970, based on analysis of the rhythmic patterns, statistics of melodic pitch-change sequences and other factors [2]. And as complexity increased, sales declined. Of course, there’s surely more to the trend than that, but it implies that “All My Loving” is about as far as most of us will go.

But mightn’t there be value – artistic, if not commercial – in exploring the extremes of simplicity and complexity? Classical musicians evidently think so, whether it is the two-note drones of 1960s ultra-minimalist La Monte Young or the formidable, rapid-fire density of the “New Complexity” school of Brian Ferneyhough. Few listeners, it must be said, want to stay in these rather austere sonic landscapes for long.

But musical complexity needn’t be ideologically driven torture. J. S. Bach sometimes stacked up as many as six of his overlapping fugal voices, while the chromatic density of György Ligeti’s Atmosphères, with up to 56 string instruments all playing different notes, made perfect sense when used as the soundtrack to Stanley Kubrick’s 2001: A Space Odyssey.

The question is: what can the human mind handle? We have trouble following two conversations at once, but we seem able to handle musical polyphony without too much trouble. There are clearly limits to how much information we can process, but on the whole we probably sell ourselves short. Studies show that the more original and unpredictable music is, the more attentive we are to it – and often, relatively little exposure is needed before a move towards the complex end of the spectrum ceases to be tedious or confusing and becomes pleasurable. Acculturation can work wonders. Before pop music colonized Indonesia, gamelan was its pop music – and that, according to one measure of complexity rooted in information theory, is perhaps the most complex major musical style in the world.

1. Davies, J. B. The Psychology of Music. Hutchinson, London, 1978.

2. Eerola, T. & North, A. C. ‘Expectancy-based model of melodic complexity’, in Proceedings of the 6th International Conference on Music Perception and Cognition (Keele University, August 2000), eds Woods, C., Luck, G., Brochard, R., Seddon, F. & Sloboda, J. Keele University, 2000.
