No option

There is an excellent article in today’s Guardian by the author John Lanchester, who turns out to have a surprisingly (but after all, why not?) thorough understanding of the derivatives market. Lanchester’s piece is motivated by the extraordinary losses chalked up by rogue trader Jérôme Kerviel of the French bank Société Générale. Kerviel’s exploits seem to be provoking the predictable shock-horror about the kind of person entrusted with the world’s finances (as though the last 20 years had never happened). I suspect it was Lanchester’s intention to leave it unstated, but one can’t read his piece without a mounting sense that the derivatives market is one of humankind’s more deranged inventions. To bemoan that is not in itself terribly productive, since it is not clear how one legislates against the situation where one person bets an insane amount of (someone else's) money on an event of which he (not she, on the whole) has not the slightest real idea of the outcome, and another person says ‘you’re on!’. All the same, it is hard to quibble with Lanchester’s conclusion that “If our laws are not extended to control the new kinds of super-powerful, super-complex, and potentially super-risky investment vehicles, they will one day cause a financial disaster of global-systemic proportions.”

All this makes me appreciate that, while I have been a small voice among many to have criticized the conventional models of economics, in fact economists are only the poor chaps trying to make sense of the lunacy that is the economy. Which brings me to Fischer Black and Myron Scholes, who, Lanchester explains, published a paper in 1973 that gave a formula for how to price derivatives (specifically, options). What Lanchester doesn’t mention is that this Nobel-winning work made the assumption that the volatility of the market – the fluctuations in prices – follows the form dictated by a normal or Gaussian distribution. The problem is that it doesn’t. This is what I said about that in my book Critical Mass:

“Options are supposed to be relatively tame derivatives—thanks to the Black-Scholes model, which has been described as ‘the most successful theory not only in finance but in all of economics’. Black and Scholes considered the question of strategy: what is the best price for the buyer, and how can both the buyer and the writer minimize the risks? It was assumed that the buyer would be given a ‘risk discount’ that reflects the uncertainty in the stock price covered by the option he or she takes out. Scholes and Black proposed that these premiums are already inherent in the stock price, since riskier stock sells for relatively less than its expected future value than does safer stock.

Based on this idea, the two went on to devise a formula for calculating the ‘fair price’ of an option. The theory was a gift to the trader, who had only to plug in appropriate numbers and get out the figure he or she should pay.

But there was just one element of the model that could not be readily specified: the market volatility, or how the market fluctuates. To calculate this, Black and Scholes assumed that the fluctuations were gaussian.

Not only do we know that this is not true, but it means that the Black-Scholes formula can produce nonsensical results: it suggests that option-writing can be conducted in a risk-free manner. This is a potentially disastrous message, imbuing a false sense of confidence that can lead to huge losses. The shortcoming arises from the erroneous assumption about market variability, showing that it matters very much in practical terms exactly how the fluctuations should be described.

The drawbacks of the Scholes-Black theory are known to economists, but they have failed to ameliorate them. Many extensions and modifications of the model have been proposed, yet none of them guarantees to remove the risks. It has been estimated that the deficiencies of such models account for up to 40 percent of the 1997 losses in derivatives trading, and it appears that in some cases traders’ rules of thumb do better than mathematically sophisticated models.”

Just a little reminder that, say what you will about the ‘econophysicists’ who are among those working on this issue, there are some rather important lacunae remaining in economic theory.
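For anyone curious about what traders were actually plugging numbers into, here is a minimal sketch of the textbook Black-Scholes formula for a European call option on a stock that pays no dividends. The function names and the input figures are my own illustrative choices, not anything taken from Lanchester's article or from the 1973 paper. The Gaussian assumption shows up as the normal cumulative distribution function, and the volatility is the one input that has to be estimated rather than simply read off.

```python
import math

def normal_cdf(x):
    """Cumulative distribution function of the standard normal (Gaussian) distribution."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def black_scholes_call(spot, strike, rate, volatility, years):
    """Textbook Black-Scholes price of a European call on a non-dividend-paying stock."""
    sqrt_t = math.sqrt(years)
    d1 = (math.log(spot / strike) + (rate + 0.5 * volatility ** 2) * years) / (volatility * sqrt_t)
    d2 = d1 - volatility * sqrt_t
    # Both terms are weighted by Gaussian probabilities: this is where the
    # assumption about how prices fluctuate enters the 'fair price'.
    return spot * normal_cdf(d1) - strike * math.exp(-rate * years) * normal_cdf(d2)

# Made-up inputs: stock at 100, strike at 100, 5% interest, 20% volatility, one year to expiry.
price = black_scholes_call(100.0, 100.0, 0.05, 0.20, 1.0)
print(round(price, 2))
```

Everything except the volatility can be looked up on the day of the trade. If real price fluctuations have fatter tails than the Gaussian curve allows, the number this produces will understate the risk of large moves.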

Saturday, January 26, 2008

Thursday, January 24, 2008

Scratchbuilt genomes

[Here’s the pre-edited version of my latest story for Nature’s online news. I discuss this work also in the BBC World Service’s Science in Action programme this week.]

By announcing the first chemical synthesis of a complete bacterial genome [1], scientists in the US have shown that the stage is now set for the creation of the first artificial organisms – something that looks likely to be achieved within the next year.

The genome of the pathogenic bacterium Mycoplasma genitalium, made in the laboratory by Hamilton Smith and his colleagues at the J. Craig Venter Institute in Rockville, Maryland, represents an increase by more than a factor of ten in the longest stretch of genetic material ever created by chemical means.

The complete genome of M. genitalium contains 582,970 of the fundamental building blocks of DNA, called nucleotide bases. Each of these was stitched in place by commercial DNA-synthesis companies according to the Venter Institute’s specifications, to make 101 separate segments of the genome. The scientists then used biotechnological methods to combine these fragments into a single genome within cells of E. coli bacteria and yeast.

M. genitalium has the smallest genome of any organism that can grow and replicate independently. (Viruses have smaller genomes, some of which have been synthesized before, but they cannot replicate on their own.) Its DNA contains the instructions for making just 485 proteins, which orchestrate the cells’ functions.

This genetic concision makes M. genitalium a candidate for the basis of a ‘minimal organism’, which would be stripped down further to contain the bare minimum of genes needed to survive. The Venter Institute team, which includes the institute’s founder, genomics pioneer Craig Venter, believe that around 100 of the bacterium’s genes could be jettisoned – but they don’t know which 100 these are.

The way to test that would be to make versions of the M. genitalium genome that lack some genes, and see whether they still provide a viable ‘operating system’ for the organism. Such an approach would also require a method for replacing a cell’s existing genome with a new, redesigned one. But Venter and his colleagues have already achieved such a ‘gene transplant’, which they reported last year between two bacteria closely related to M. genitalium [2].

Their current synthesis of the entire M. genitalium genome thus provides the other part of the puzzle. Chemical synthesis of DNA involves sequentially adding one of the four nucleotide bases to a growing chain in a specified sequence. The Venter Institute team farmed out this task to the companies Blue Heron Technology, DNA2.0 and GENEART.

But it is beyond the capabilities of the current techniques to join up all half a million or so bases in a single, continuous process. That was why the researchers ordered 101 fragments or ‘cassettes’, each of about 5000-7000 bases and with overlapping sequences that enabled them to be stuck together by enzymes.
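The principle of joining fragments by their overlapping ends can be shown with a toy example. This is only a sketch of the idea, not the Venter team's protocol, which relies on enzymes working inside living cells. The function names and the tiny made-up sequences are hypothetical, and the code assumes the fragments are already in the right order.

```python
def overlap_length(left, right):
    """Length of the longest suffix of `left` that is also a prefix of `right`."""
    for k in range(min(len(left), len(right)), 0, -1):
        if left[-k:] == right[:k]:
            return k
    return 0

def stitch(cassettes):
    """Join ordered fragments, merging each one onto the growing chain at its overlap."""
    assembled = cassettes[0]
    for fragment in cassettes[1:]:
        k = overlap_length(assembled, fragment)
        if k == 0:
            raise ValueError("adjacent fragments share no overlapping sequence")
        assembled += fragment[k:]  # append only the part not already present
    return assembled

# Three made-up 'cassettes' whose ends overlap by three bases.
print(stitch(["ATGCGT", "CGTTAA", "TAAGGC"]))  # prints ATGCGTTAAGGC
```

The real numbers are of course far larger: 582,970 bases spread over 101 cassettes comes to roughly 5,800 bases each before the overlaps are counted, which fits the quoted range of 5000-7000.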

To distinguish the synthetic DNA from the genomes of ‘wild’ M. genitalium, Smith and colleagues included ‘watermark’ sequences: stretches of DNA carrying a kind of barcode that designates its artificiality. These watermarks must be inserted at sites in the genome known to be able to tolerate such additions without their genetic function being impaired.

The researchers made one further change to the natural genome: they altered one gene in a way that was known to render M. genitalium unable to stick to mammalian cells. This ensured that cells carrying the artificial genome could not act as pathogens.

The cassettes were stitched together into strands that each contained a quarter of the total genome using DNA-linking enzymes within E. coli cells. But, for reasons that the researchers don’t yet understand, the final assembly of these quarter-genomes into a single circular strand didn’t run smoothly in the bacteria. So the team transferred them to cells of brewers’ yeast, in which the last steps of the assembly were carried out.

Smith and colleagues then extracted these synthetic genomes from the yeast cells, and used enzymes to chew up the yeast’s own DNA. They read out the sequences of the remaining DNA to check that these matched those of wild M. genitalium (apart from the deliberate modifications such as watermarks).

The ultimate evidence that the synthetic genomes are authentic copies, however, will be to show that cells can be ‘booted up’ when loaded with this genetic material. “This is the next step and we are working on it”, says Smith.

Advances in DNA synthesis might ultimately make this laborious stitching of fragments unnecessary, but Dorene Farnham, director of sales and marketing at Blue Heron in Bothell, Washington, stresses that that’s not a foregone conclusion. “The difficulty is not about length”, she says. “There are many other factors that go into getting these synthetic genes to survive in cells.”

Venter’s team hopes that a stripped-down version of the M. genitalium genome might serve as a general-purpose chassis to which might be added all sorts of useful designer functions, for example including genes that turn the bacteria into biological factories for making carbon-based ‘green’ fuels or hydrogen when fed with nutrients.

The next step towards that goal is to build potential minimal genomes from scratch, transplant them into Mycoplasma, and see if they will keep the cells alive. “We plan to start removing putative ‘non-essential’ genes and test whether we get viable transplants”, says Smith.

References

1. Gibson, D. G. et al. Science Express doi:10.1126/science.1151721 (2008).

2. Lartigue, C. et al. Science 317, 632 (2007).

Tuesday, January 22, 2008

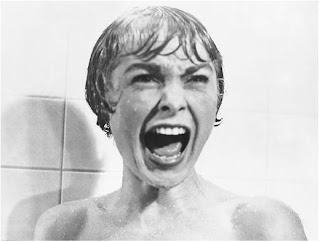

Differences in the shower

[This is how my latest article for Nature’s Muse column started out. Check out also a couple of interesting papers in the latest issue of Phys. Rev. E: a study of how ‘spies’ affect the minority game, and a look at the value of diversity in promoting cooperation in the spatial Prisoner’s Dilemma.]

A company sets out to hire a 20-person team to solve a tricky problem, and has a thousand applicants to choose from. So they set them all a test related to the problem in question. Should they then pick the 20 people who do best? That sounds like a no-brainer, but there are situations in which it would be better to hire 20 of the applicants at random.

This scenario was presented four years ago by social scientists Lu Hong and Scott Page of the University of Michigan [1] as an illustration of the value of diversity in human groups. It shows that many different minds are sometimes more effective than many ‘expert’ minds. The drawback of having a team composed of the ‘best’ problem-solvers is that they are likely all to think in the same way, and so are less likely to come up with versatile, flexible solutions. “Diversity”, said Hong and Page, “trumps ability.”

Page believes that studies like this, which present mathematical models of decision-making, show that initiatives to encourage cultural diversity in social, academic and institutional settings are not just exercises in politically correct posturing. To Page, they are ways of making the most of the social capital that human difference offers.

There are evolutionary analogues to this. Genetic diversity in a population confers robustness in the face of a changing environment, whereas a group of almost identical ‘optimally adapted’ organisms can come to grief when the wind shifts. Similarly, sexual reproduction provides healthy variety in our own genomes, while in ecology monocultures are notoriously fragile in the face of new threats.

But it’s possible to overplay the diversity card. Expert opinion, literary and artistic canons, and indeed the whole concept of ‘excellence’ have become fashionable whipping boys to the extent that some, particularly in the humanities, worry about standards and judgement vanishing in a deluge of relativist mediocrity. Of course it is important to recognize that diversity does not have to mean ‘anything goes’ (a range of artistic styles does not preclude discrimination of good from bad within each of them) – but that’s often what sceptics of the value of ‘diversity’ fear.

This is why models like that of Hong and Page bring some valuable precision to the questions of what diversity is and why and when it matters. That issue now receives a further dose of enlightenment from a study that looks, at face value, to be absurdly whimsical.

Economist Christina Matzke and physicist Damien Challet have devised a mathematical model of (as they put it) “taking a shower in youth hostels” [2]. Among the risks of budget travel, few are more hazardous than this. If you try to have a shower at the same time as everyone else, it’s a devil of a job adjusting the taps to get the right water temperature.

The problem, say Matzke and Challet, is that in the primitive plumbing systems of typical hostels, one person changing their shower temperature settings alters the balance of hot and cold water for everyone else too. They in turn try to retune the settings to their own comfort, with the result that the shower temperatures fluctuate wildly between scalding and freezing. Under what conditions, they ask, can everyone find a mutually acceptable compromise, rather than all furiously altering their shower controls while cursing the other guests?

So far, so amusing. But is this really such a (excuse me) burning issue? Challet’s previous work provides some kind of answer to that. Several years ago, he and physicist Yi-Cheng Zhang devised the so-called minority game as a model for human decision-making [3]. They took their lead from economist Brian Arthur, who was in the habit of frequenting a bar called El Farol in the town of Santa Fe where he worked [4]. The bar hosted an Irish music night on Thursdays which was often so popular that the place would be too crowded for comfort.

Noting this, some El Farol clients began staying away on Irish nights. That was great for those who did turn up – but once word got round that things were more comfortable, overcrowding resumed. In other words, attendance would fluctuate wildly, and the aim was to go only on those nights when you figured others would stay away.

But how do you know which nights those are? You don’t, of course. Human nature, however, prompts us to think we can guess. Maybe low attendance one week means high attendance the next? Or if it’s been busy three weeks in a row, the next is sure to be quiet? The fact is that there’s no ‘best’ strategy – it depends on what strategies others use.

The point of the El Farol problem, which Challet and Zhang generalized, is to be in the minority: to stay away when most others go, and vice versa. The reason why this is not a trivial issue is that the minority game serves as a proxy for many social situations, from lane-changing in heavy traffic to choosing your holiday destination. It is especially relevant in economics: when most traders are buying, for example, it pays to be a seller. It’s unlikely that anyone decided whether or not to go to El Farol by plotting graphs and statistics, but market traders certainly do so, hoping to tease out trends that will enable them to make the best decisions. Each has a preferred strategy.

The maths of the minority game looks at how such strategies affect one another, how they evolve and how the ‘agents’ playing the game learn from experience. I once played it in an interactive lecture in which push-button voting devices were distributed to the audience, who were asked to decide in each round whether to be in group A or group B. (The one person who succeeded in being in the minority in all of several rounds said that his strategy was to switch his vote from one group to the other “one round later than it seemed common sense to do so.”)
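To make the mechanics concrete, here is a rough sketch of a basic minority game of the kind usually described in the literature. The parameter values, the function name and the details of scoring are my own assumptions for illustration, not a reproduction of Challet and Zhang's paper. Each agent holds a few fixed strategies, each of which is a lookup table from the recent history of winning sides to a choice of group, and in every round the agent plays whichever of its strategies would have done best so far.

```python
import random

def play_minority_game(n_agents=101, memory=3, n_strategies=2, n_rounds=200, seed=0):
    """Simulate a basic minority game and return the size of group B in each round."""
    rng = random.Random(seed)
    n_histories = 2 ** memory
    # strategies[agent][s][history] is 0 (group A) or 1 (group B)
    strategies = [[[rng.randint(0, 1) for _ in range(n_histories)]
                   for _ in range(n_strategies)]
                  for _ in range(n_agents)]
    scores = [[0] * n_strategies for _ in range(n_agents)]
    history = rng.randrange(n_histories)  # last `memory` winning sides, packed into an integer
    group_b_sizes = []
    for _ in range(n_rounds):
        choices = []
        for agent in range(n_agents):
            # Each agent plays its best-scoring strategy (ties go to the first one).
            best = max(range(n_strategies), key=lambda s: scores[agent][s])
            choices.append(strategies[agent][best][history])
        n_b = sum(choices)
        minority = 1 if n_b < n_agents - n_b else 0  # n_agents is odd, so no ties
        # Reward every strategy that would have picked the minority side.
        for agent in range(n_agents):
            for s in range(n_strategies):
                if strategies[agent][s][history] == minority:
                    scores[agent][s] += 1
        history = ((history << 1) | minority) % n_histories
        group_b_sizes.append(n_b)
    return group_b_sizes

sizes = play_minority_game()
print(min(sizes), max(sizes))
```

Changing `memory` and `n_strategies` alters how varied the pool of strategies is, and watching how widely the group sizes swing is one way of exploring the question that follows.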

So what about the role of diversity? Challet’s work showed that the more mixed the strategies of decision-making are, the more reliably the game settles down to the optimal average size of the majority and minority groups. In other words, attendance at El Farol doesn’t in that case fluctuate so much from one week to the next, and is usually close to capacity.

The Shower Temperature Problem is very different, because in principle the ideal situation, where everyone gets closest to their preferred temperature, happens when they all set their taps in the same way – that is, they all use the same strategy. However, this solution is unstable – the slightest deviation, caused by one person trying to tweak the shower settings to get a bit closer to the ideal, sets off wild oscillations in temperature as others respond.

In contrast, when there is a diversity of strategies – agents use a range of tap settings in an attempt to hit the desired water temperature – then these oscillations are suppressed and the system converges more reliably to an acceptable temperature for all. But there’s a price paid for that stability. While overall the water temperature doesn’t fluctuate strongly, individuals may find they have to settle for a temperature further from the ideal value than they would in the case of identical shower settings.

This problem is representative of any in which many agents try to obtain equal amounts of some fixed quantity that is not necessarily abundant enough to satisfy them all completely – factories or homes competing for energy in a power grid, perhaps. But more generally, the model of Matzke and Challet shows how diversity in decision-making may fundamentally alter the collective outcome. That may sound obvious, but don’t count on it. Conventional economic models have for decades stubbornly insisted on making all their agents identical. They are ‘representative’ – one size fits all – and they follow a single ‘optimal’ strategy that maximizes their gains.

There’s a good reason for this assumption: the models are very hard to solve otherwise. But there’s little point in having a tractable model if it doesn’t come close to describing reality. The static view of a ‘representative’ agent leads to the prediction of an ‘equilibrium’ economy, rather like the equilibrium shower system of Matzke and Challet’s homogeneous agents. Anyone contemplating the current world economy knows all too well what a myth this equilibrium is – and how real-world behaviour is sure to depend on the complex mix of beliefs that economic agents hold about the future and how to deal with it.

More generally, the Shower Temperature Problem offers another example of how difference and diversity can improve the outcome of group decisions. Encouraging diversity is not then about being liberal or tolerant (although it tends to require both) but about being rational. Perhaps the deeper challenge for human societies, and the one that underpins current debates about multiculturalism, is how to cope with differences not in problem-solving strategies but in the question of what the problems are and what the desired solutions should be.

References

1. Hong, L. & Page, S. E. Proc. Natl Acad. Sci. USA 101, 16385 (2004).

2. Matzke, C. & Challet, D. preprint http://www.arxiv.org/abs/0801.1573 (2008).

3. Challet, D. & Zhang, Y.-C. Physica A 246, 407 (1997).

4. Arthur, W. B. Am. Econ. Assoc. Papers & Proc. 84, 406 (1994).

Wednesday, January 16, 2008

Groups, glaciation and the pox

[This is the pre-edited version of my Lab Report column for the February issue of Prospect.]

Blaming America for the woes of the world is an old European habit. Barely three decades after Columbus’s crew returned from the New World, a Spanish doctor claimed they brought back the new disease that was haunting Europe: syphilis, so named in the 1530s by the Italian Girolamo Fracastoro. All social strata were afflicted: kings, cardinals and popes suffered alongside soldiers, although sexual promiscuity was so common that the venereal nature of the disease took time to emerge. Treatments were fierce and of limited value: inhalations of mercury vapour had side-effects as bad as the symptoms, while only the rich could afford medicines made from guaiac wood imported from the West Indies.

But it became fashionable during the twentieth century to doubt the New World origin of syphilis: perhaps the disease was a dormant European one that acquired new virulence during the Renaissance? Certainly, the bacterial spirochete Treponema pallidum (subspecies pallidum) that causes syphilis is closely related to other ‘treponemal’ pathogens, such as that which causes yaws in hot, humid regions like the Congo and Indonesia. Most of these diseases leave marks on the skeleton and so can be identified in human remains. They are seen widely in New World populations dating back thousands of years, but reported cases of syphilis-like lesions in Old World remains before Columbus have been ambiguous.

Now a team of scientists in Atlanta, Georgia, has analysed the genetics of many different strains of treponemal bacteria to construct an evolutionary tree that not only identifies how venereal syphilis emerged but shows where in the world its nearest genetic relatives are found. This kind of ‘molecular phylogenetics’, which builds family trees not from a traditional comparison of morphologies but by comparing gene sequences, has revolutionized palaeontology, and it works as well for viruses and bacteria as it does for hominids and dinosaurs. The upshot is that T. pallidum subsp. pallidum is more closely related to a New World subspecies than it is to Old World strains. In other words, it looks as though the syphilis spirochete indeed mutated from an American progenitor. That doesn’t quite imply that Columbus’s sailors brought syphilis back with them, however – it’s also possible that they carried a non-venereal form that quickly mutated into the sexually transmitted disease on its arrival. Given that syphilis was reported within two years of Columbus’s landing in Spain, that would have been a quick change.

****

Having helped to bury the notion of group selection in the 1970s, Harvard biologist E. O. Wilson is now attempting to resurrect it. He has a tough job on his hands; most evolutionary biologists have firmly rejected this explanation for altruism, and Richard Dawkins has called Wilson’s new support for group selection a ‘weird infatuation’ that is ‘unfortunate in a biologist who is so justly influential.’

The argument is all about why we are (occasionally) nice to one another, rather than battling, red in tooth and claw, for limited resources. The old view of group selection said simply that survival prospects may improve if organisms act collectively rather than individually. Human altruism, with its framework of moral and social imperatives, is murky territory for such questions, but cooperation is common enough in the wild, particularly in eusocial insects such as ants and bees. Since the mid-twentieth century such behaviour has been explained not by vague group selection but via kin selection: by helping those genetically related to us, we propagate our genes. It is summed up in the famous formulation of J. B. S. Haldane that he would lay down his life for two brothers or eight cousins – a statement of the average genetic overlaps that make the sacrifice worthwhile. Game theory now offers versions of altruism that don’t demand kinship – cooperation of non-relatives can also be to mutual benefit – but kin selection remains the dominant explanation for eusociality.
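Haldane's arithmetic is easy to check. The sketch below assumes the standard coefficients of relatedness, one half for full siblings and one eighth for first cousins, which the quip implies but does not state. The function names are hypothetical. The break-even test is a simplified form of what is usually written as Hamilton's rule, rB > C, with the cost set to one life.

```python
from fractions import Fraction

# Standard coefficients of relatedness (an assumption made explicit here).
RELATEDNESS = {"brother": Fraction(1, 2), "cousin": Fraction(1, 8)}

def gene_copies_saved(relative, number_saved):
    """Expected share of the altruist's genes preserved by saving these relatives."""
    return RELATEDNESS[relative] * number_saved

def sacrifice_breaks_even(relative, number_saved, cost=1):
    """True if the genes saved at least match the cost of the altruist's own life."""
    return gene_copies_saved(relative, number_saved) >= cost

print(gene_copies_saved("brother", 2))    # prints 1
print(gene_copies_saved("cousin", 8))     # prints 1
print(sacrifice_breaks_even("cousin", 7)) # prints False
```

Two brothers or eight cousins each add up to exactly one copy of the altruist's genes, which is the break-even point. Anything less and, on this accounting, the sacrifice does not pay.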

That was the position advocated by Wilson in his 1975 book Sociobiology. In a forthcoming book The Superorganism, and a recent paper, he now reverses this claim and says that kin selection may not be all that important. What matters, he says, is that a population possess genes that predispose the organisms to flexible behavioural choices, permitting a switch from competitive to cooperative action in ‘one single leap’ when the circumstances make it potentially beneficial.

Wilson cites a lack of direct, quantitative evidence for kin selection, although others have disputed that criticism. In the end the devil is in the details – specifically in the maths of how much genetic common ground a group needs to make self-sacrifice pay – and it’s not clear that either camp yet has the numbers to make an airtight case.

****

The discovery of ice sheets half the size of today’s Antarctic ice cap during the ‘super-greenhouse’ climate of the Turonian stage, 93.5-89.3 million years ago, seems to imply that we need not fret about polar melting today. With atmospheric greenhouse gas levels 3-10 times higher than now, ocean temperatures around 5 °C warmer, and crocodiles swimming in the Arctic, the Turonian sounds like the IPCC’s worst nightmare. But it’s not at all straightforward to extrapolate between then and now. More intense circulation of water in the atmosphere could have left thick glaciers on the high mountains and plateaus of Antarctica even in those torrid times. In any event, a rather particular set of climatic circumstances seems to have been at play – the glaciation does not persist throughout the warm Cretaceous period. And it is always important to remember that, with climate, where you end up tends to depend on where you started from.

Friday, January 11, 2008

In praise of wrong ideas

[This is my latest column for Chemistry World, and explains what I got up to on Monday night. I’m not sure when the series is being broadcast – this was the first to be recorded. It’s an odd format, and I’m not entirely sure it works, or at least, not yet. Along with Jonathan Miller, my fellow guest was mathematician Marcus du Sautoy. Jonathan chose to submit the Nottingham Alabasters (look it up – interesting stuff), and Marcus the odd symmetry group called the Monster.]

I can’t say that I’d expected to find myself defending phlogiston, let alone in front of a comedy audience. But I wasn’t angling for laughs. I was aiming to secure a place for phlogiston in the ‘Museum of Curiosities’, an institution that exists only in the ethereal realm of BBC’s Radio 4. In a forthcoming series of the same name, panellists submit an item of their choice to the museum, explaining why it deserves a place. The show will have laughs – the curator is the British comedian Bill Bailey – but actually it needn’t. The real aim is to spark discussion of the issues that each guest’s choice raises. For phlogiston, there are plenty of those.

What struck me most during the recording was how strongly the old historiographic image of phlogiston still seems to hold sway. In 1930 the chemical popularizer Bernard Jaffe wrote that phlogiston, which he attributed to the alchemist Johann Becher, ‘nearly destroyed the progress of chemistry’, while in 1957 the science historian John Read called it a ‘theory of unreason.’ Many of us doubtless encountered phlogiston in derisive terms during our education, which is perhaps why it is forgivable that the programme’s producers wanted to know of ‘other scientific theories from the past that look silly today’. But even the esteemed science communicator, the medical doctor Jonathan Miller (who was one of my co-panellists), spoke of the ‘drivel’ of the alchemists and suggested that natural philosophers of earlier times got things like this wrong because they ‘didn’t think smartly enough’.

I feel this isn’t the right way to think about phlogiston. Yes, it had serious problems even from the outset, but that was true of just about any fundamental chemical theory of the time, Lavoisier’s oxygen included. Phlogiston also had a lot going for it, not least because it unified a wealth of observations and phenomena. Arguably it was the first overarching chemical theory with a recognizably modern character, even if the debts to ancient and alchemical theories of the elements remained clear.

Phlogiston was in fact named in 1718 by Georg Stahl, professor of medicine at the University of Halle, who derived it from the Greek phlogistos, to set on fire. But Stahl took the notion from Becher’s terra pinguis or fatty earth, one of three types of ‘earth’ that Becher designated as ‘principles’ responsible for mineral formation. Becher’s ‘earths’ were themselves a restatement of the alchemical principles sulphur, mercury and salt proposed as the components of all things by Paracelsus. Terra pinguis was the principle of combustibility – it was abundant in oily or sulphurous substances.

The idea, then, was that phlogiston made things burn. When wood or coal was ignited, its phlogiston was lost to the air, which was why its mass decreased. Combustion ceased when the air became saturated with phlogiston. One key problem, noted but not explained by Stahl, was that metals don’t lose but gain weight when combusted. This is often a source of modern scorn, for it led later scientists to contorted explanations such as that phlogiston buoyed up heavier substances, or (sometimes) had negative weight. Those claims prompted Lavoisier ultimately to denounce phlogiston as a ‘veritable Proteus’ that ‘adapts itself to all the explanations for which it may be required.’ But actually it was not always clear whether metals did gain weight when burnt, for the powerful lenses used for heating them could sublimate the oxides.

In any event, phlogiston explained not only combustion but also acidity, respiration, chemical reactivity, and the growth and properties of plants. As Oliver Morton points out in his new book Eating the Sun (Fourth Estate), the Scottish geologist James Hutton invoked a ‘phlogiston cycle’ analogous to the carbon and energy cycles of modern earth scientists, in which phlogiston was a kind of fixed sunlight taken up by plants, some of which is buried in the deep earth as coal and which creates a ‘constant fire in the mineral regions’ that powers volcanism.

So phlogiston was an astonishingly fertile idea. The problem was not that it was plain wrong, but that it was so nearly right – it was the mirror image of the oxygen theory – that it could not easily be discredited. And indeed, that didn’t happen as cleanly and abruptly as implied in conventional accounts of the Chemical Revolution – as Hasok Chang at University College London has explained, phlogistonists persisted well into the nineteenth century, and even eminent figures such as Humphry Davy were sceptical of Lavoisier.

This is one of the reasons I chose phlogiston for the museum – it reminds us of our ahistorical tendency to clean up science history in retrospect, and to divide people facilely into progressives and conservatives. It also shows that the opposite of a good idea can also be a good idea. And it reminds us that science is not about being right but being a little less wrong. I’m sure that one day the dark matter and dark energy of cosmologists will look like phlogiston does now: not silly ideas, but ones that we needed until something better came along.

